Google Play Takes Action Against AI Apps Amid Spread of Deepfake Nude Creation Programs

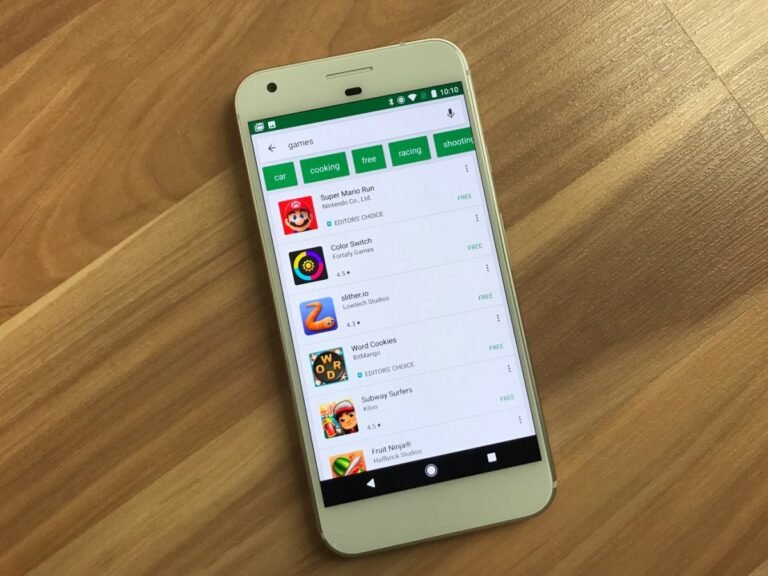

Google on Thursday is issuing new guidance for developers building AI apps distributed through Google Play, in hopes of cutting down on inappropriate and otherwise prohibited content.

Schools across the U.S. are reporting problems with students passing around AI deepfake nudes of other students (and sometimes teachers) for bullying and harassment, alongside other sorts of inappropriate AI content.

Google says its policies will help keep Google Play free of apps featuring AI-generated content that can be inappropriate or harmful to users.

The company points developers to its existing AI-Generated Content Policy for the requirements apps must meet for approval on Google Play.

The company is also publishing other resources and best practices, like its People + AI Guidebook, which aims to support developers building AI apps.