In the world of independent writing, Substack has become the go-to platform for its industry-leading newsletter tools. However, recent missteps in content moderation may pose a threat to the platform’s success.

In a November report, The Atlantic revealed that a search on Substack uncovered numerous newsletters promoting white supremacy, neo-Confederacy, and Nazi ideologies. Shockingly, some newsletters even included explicit Nazi imagery, such as swastikas and the black sun symbol, which is commonly used by modern white supremacists. These hateful images were prominently displayed on Substack, including in newsletter logos, where they could easily have been detected by the kind of algorithmic moderation systems that traditional social media platforms use.

Upon discovering this alarming content on Substack, writers on the platform banded together to voice their concerns. In an open letter signed by nearly 250 authors, they demanded an explanation from the company for allowing neo-Nazis and other white supremacists to publish and profit on its platform. The letter asked Substack, “Is platforming Nazis part of your vision of success?” and urged writers to consider whether they wanted to continue supporting a platform that condoned such hateful content.

In response, Substack co-founder Hamish McKenzie published a note on the website addressing the mounting concerns about the company’s content moderation policies. He wrote that although “we don’t like Nazis either,” Substack would continue to host extremist content and uphold its decentralized approach to moderation, which gives power to readers and writers.

However, by allowing the dissemination of hate, McKenzie overlooks or disregards the harm it does to the marginalized people it targets. By providing a platform for even a small number of extremist newsletters, Substack sends a message that this type of content is allowed and accepted.

In an endorsement of the open letter, Margaret Atwood posed a crucial question, “What does ‘Nazi’ mean, or signify?” She noted that among other things, it signifies “Kill all Jews.” Atwood questioned what else the term could mean, as anyone displaying Nazi insignia or claiming the name is essentially advocating for genocide.

Unfortunately, Substack’s lack of expertise and lack of interest in content moderation are no surprise. The stance of the company’s leadership and its previous controversies have made both evident since the platform first rose to popularity. In an interview with Nilay Patel, editor-in-chief of The Verge, Substack CEO Chris Best struggled to answer questions about content moderation. When pressed on whether Substack would allow racist extremism to thrive on its platform, Best became defensive and stated that he would not engage in speculation or discuss specific content scenarios.

After facing backlash, McKenzie published a follow-up post correcting the company’s initial response. He stated, “We messed that up,” and made it clear that Substack does not condone bigotry in any form. However, the company’s actions suggest otherwise. The platform has even allowed a monetized newsletter from Richard Spencer, a prominent white supremacist and Unite the Right organizer. Substack takes a 10% cut of the revenue from writers who monetize their presence on the platform.

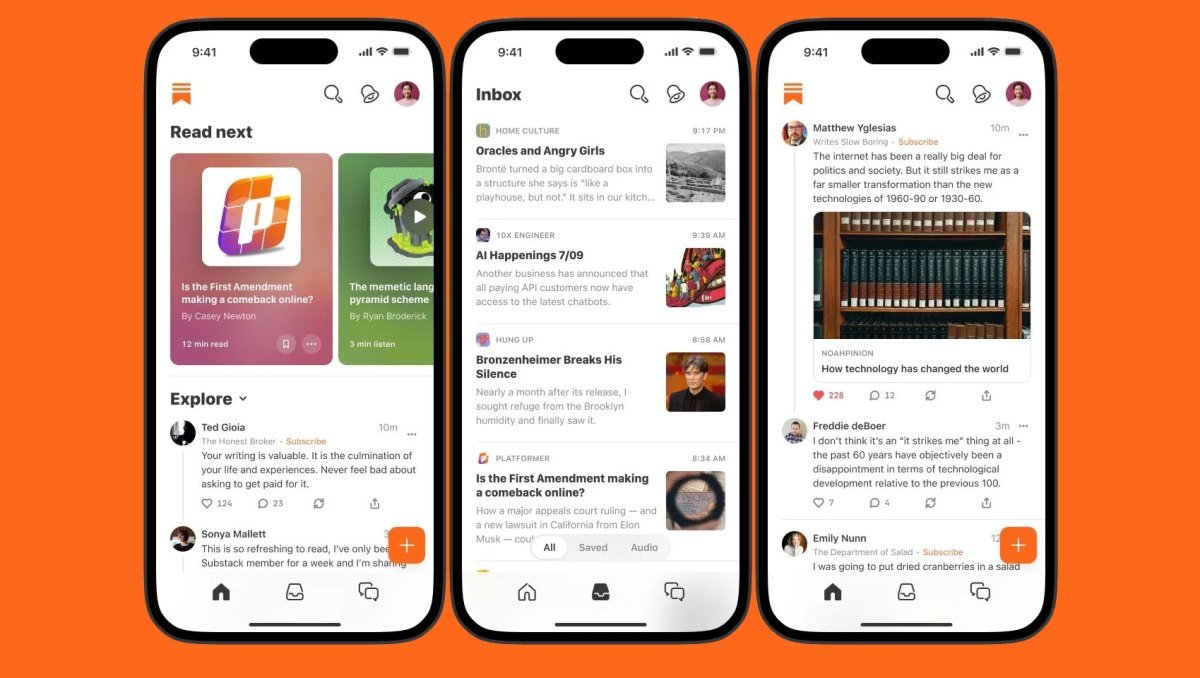

As a result, many Substack authors are at a crossroads. Some have already made the switch to competing platforms, such as Beehiiv, taking their substantial readerships with them. Others, like tech journalist Casey Newton of Platformer, are calling for Substack to crack down on neo-Nazi content and have flagged accounts that violate the company’s rules against inciting violence. Newton, who has closely followed content moderation on traditional social media platforms, argues that Substack’s recommendation algorithms could have the same harmful consequences as those of Twitter, Facebook, and YouTube.

Finally, Substack responded by removing from the platform “several publications that endorse Nazi ideology” that appeared on Platformer’s list of flagged accounts. However, the company maintains that it will not proactively remove extremist content and will instead rely on users to flag it. This approach is concerning, as it puts the burden on users to do the company’s basic moderation work.

Despite its polished appearance and popular publisher tools, Substack’s lack of serious content moderation may lead to mainstream writers and readers abandoning the platform. It is unfortunate that both writers and readers now have to deal with yet another form of uncertainty in the already unstable publishing world.