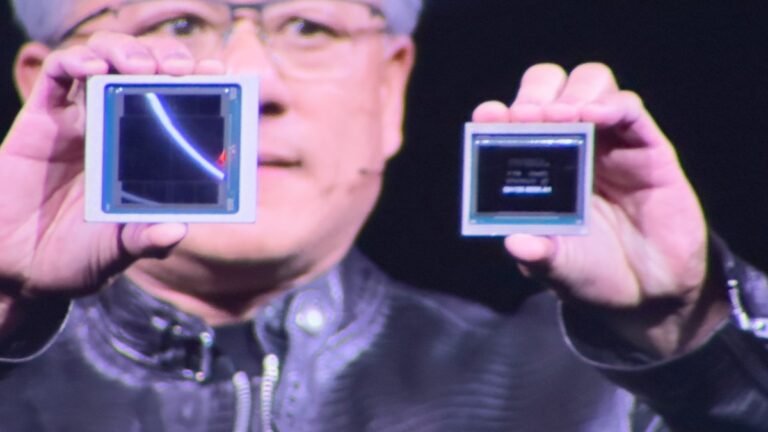

“And here we are, in the generative AI revolution.”

Update: This post was updated to include new information and a video of the keynote.

Nvidia chips give graphics-hungry gamers the tools they need to play games in higher resolution, with higher quality and higher frame rates.

Anyone who had come to the keynote expecting Huang to pull a Tim Cook, with a slick, audience-focused presentation, was bound to be disappointed.

The company also introduced Nvidia NIM, a software platform aimed at simplifying the deployment of AI models.

NIM leverages Nvidia’s hardware as a foundation and aims to accelerate companies’ AI initiatives by providing an ecosystem of AI-ready containers.

“Anything you can digitize: So long as there is some structure where we can apply some patterns, means we can learn the patterns,” Huang said.

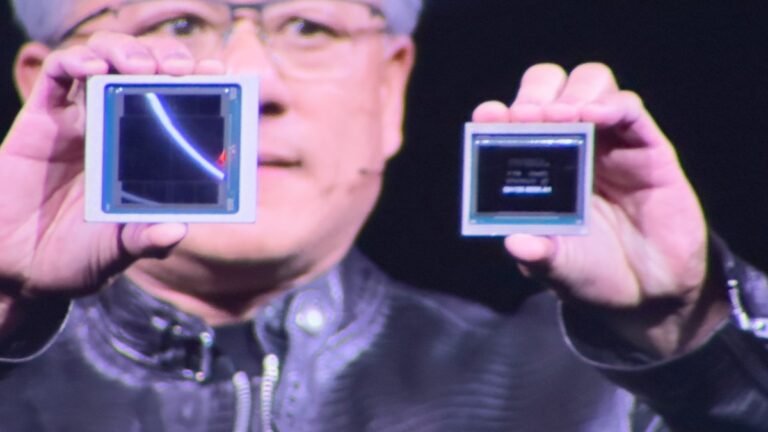

At its GTC conference, Nvidia today announced Nvidia NIM, a new software platform designed to streamline the deployment of custom and pre-trained AI models into production environments.

NIM builds on the software work Nvidia has done around inferencing and optimizing models: It pairs a given model with an optimized inferencing engine, packs both into a container and makes that container accessible as a microservice.
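For readers who want a feel for the general pattern, here is a minimal sketch of a model wrapped as a microservice, using only Python's standard library. It is not NIM and does not use any Nvidia API: the weights, the `run_inference` and `handle_payload` functions, the JSON request shape and the port are all hypothetical stand-ins chosen for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for a trained model: a fixed set of weights.
MODEL_WEIGHTS = [0.5, -1.0, 2.0]


def run_inference(features):
    """Toy 'inference engine': a dot product of the weights and the input."""
    if len(features) != len(MODEL_WEIGHTS):
        raise ValueError("expected %d features" % len(MODEL_WEIGHTS))
    return sum(w * x for w, x in zip(MODEL_WEIGHTS, features))


def handle_payload(raw_body):
    """Decode a JSON request body, run the model, and encode a JSON reply."""
    request = json.loads(raw_body)
    score = run_inference(request["features"])
    return json.dumps({"score": score}).encode("utf-8")


class InferenceHandler(BaseHTTPRequestHandler):
    """HTTP front end: the only thing callers of the service ever see."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            reply = handle_payload(self.rfile.read(length))
            status = 200
        except (ValueError, KeyError, TypeError) as err:
            # Malformed JSON, a missing field or wrong input types.
            reply = json.dumps({"error": str(err)}).encode("utf-8")
            status = 400
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(reply)))
        self.end_headers()
        self.wfile.write(reply)


def serve(port=8000):
    """Start the service; in a container this would be the entry point."""
    HTTPServer(("0.0.0.0", port), InferenceHandler).serve_forever()
```

Calling `serve()` starts the service, and a client would then POST a body such as `{"features": [2, 1, 1]}` to it. The point of the pattern is that callers only need the HTTP interface, while the model and the engine behind it can be swapped or optimized inside the container without changing client code.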

Nvidia is already working with Amazon, Google and Microsoft to make these NIM microservices available on SageMaker, Kubernetes Engine and Azure AI, respectively.

Some of the Nvidia microservices available through NIM will include Riva for customizing speech and translation models, cuOpt for routing optimizations and the Earth-2 model for weather and climate simulations.

“Created with our partner ecosystem, these containerized AI microservices are the building blocks for enterprises in every industry to become AI companies.”