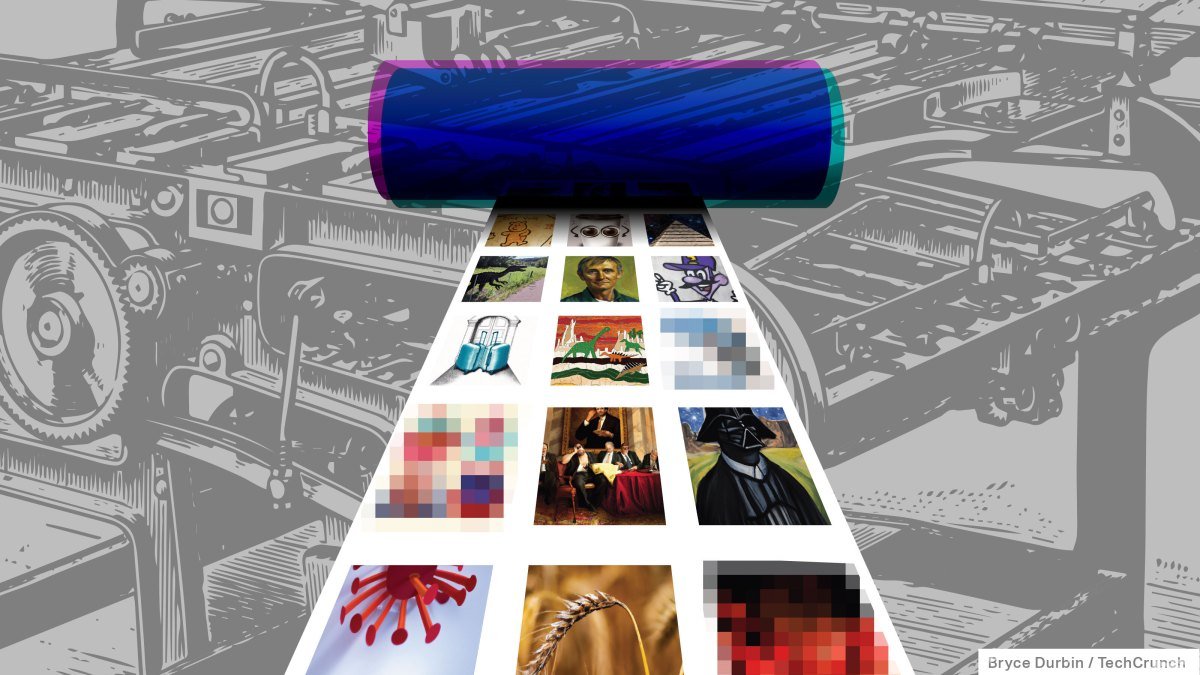

Text-to-image AI models like Midjourney and OpenAI’s DALL-E 3 can turn almost any description into a work of art, in styles ranging from photorealism to cubism. The results can look as if they were created by an actual artist rather than an AI.

However, many of these models were trained on artwork without the knowledge or consent of its creators. While some companies have started to compensate artists and allow them to “opt out” of model training, many still do not.

In the absence of clear guidelines from the courts and Congress, entrepreneurs and activists have stepped in with tools that let artists protect their artwork from being used in GenAI model training. One such tool, Nightshade, was released this week. It subtly alters the pixels of an image to trick models into perceiving the image as something else entirely. Another tool, Kin.art, uses image segmentation and tag randomization to disrupt the model training process.

Kin.art’s tool officially launched today. It was co-developed by Flor Ronsmans De Vry, Mai Akiyoshi and Ben Yu, who also co-founded Kin.art, a platform for managing art commissions, a few months ago. According to Ronsmans De Vry, art-generating models are trained on datasets of labeled images to learn the connections between written concepts and images, such as how the word “bird” can represent various types of birds. “Disrupting” either the image or the labels associated with a piece of art makes it more difficult for vendors to use that artwork in model training.
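To make the two ideas concrete, here is a minimal sketch in Python. It is not Kin.art’s implementation, which the company has not detailed here. It assumes that “segmentation” means splitting an image into tiles that are stored or served separately, and that “tag randomization” means shuffling labels across artworks so a scraped image no longer arrives with its true description. The function names (`segment_image`, `reassemble`, `randomize_tags`) and the toy data are hypothetical.

```python
import random


def segment_image(pixels, tile_size):
    """Split a 2D grid of pixels into tiles keyed by their (top, left) origin.

    Assumption: each tile would be stored or served separately, so a scraper
    that downloads one file gets only a fragment of the artwork.
    """
    height = len(pixels)
    width = len(pixels[0]) if pixels else 0
    tiles = {}
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            tiles[(top, left)] = [
                row[left:left + tile_size]
                for row in pixels[top:top + tile_size]
            ]
    return tiles


def reassemble(tiles, height, width):
    """Rebuild the full image from its tiles, as a legitimate viewer would."""
    canvas = [[None] * width for _ in range(height)]
    for (top, left), tile in tiles.items():
        for dy, row in enumerate(tile):
            for dx, value in enumerate(row):
                canvas[top + dy][left + dx] = value
    return canvas


def randomize_tags(tags_by_artwork, rng):
    """Return a new mapping with the tag lists shuffled across artworks.

    Assumption: the shuffled mapping is what a scraper sees, while the
    platform keeps the true mapping private.
    """
    artwork_ids = list(tags_by_artwork)
    tag_lists = [tags_by_artwork[artwork_id] for artwork_id in artwork_ids]
    rng.shuffle(tag_lists)
    return dict(zip(artwork_ids, tag_lists))


# Toy data: a 4x6 "image" of integers and three labeled artworks.
image = [[row * 6 + col for col in range(6)] for row in range(4)]
tiles = segment_image(image, tile_size=2)
public_tags = randomize_tags(
    {"art-1": ["bird", "watercolor"], "art-2": ["city"], "art-3": ["portrait"]},
    random.Random(0),
)
```

In this sketch, someone who collects a single tile and the public tags gets an image fragment paired with labels that may describe a different piece, while the platform can still show the full artwork by calling `reassemble`.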

“Creating a landscape where traditional art and generative art can coexist has become one of the biggest challenges facing the art industry,” Ronsmans De Vry said in an email to TechCrunch. “We believe it all starts with an ethical approach to AI training, where the rights of artists are respected.”

Ronsmans De Vry claims that Kin.art’s tool for defeating model training is superior to existing solutions because it does not require expensive cryptographic modifications to images. However, he notes that it can be combined with those methods for additional protection.

“Other tools available to protect against AI training focus on mitigating the damage after your artwork has already been included in the dataset by poisoning it,” Ronsmans De Vry explained. “Our tool prevents your artwork from being included in the first place.”

While the tool itself is free, artists must upload their artwork to Kin.art’s portfolio platform in order to use it. The tool will likely steer artists toward Kin.art’s paid services for finding and facilitating art commissions, which are the company’s primary source of income.

However, Ronsmans De Vry insists that this effort is primarily philanthropic and that Kin.art will eventually make the tool available to third parties.

“After thoroughly testing our solution on our own platform, we plan to offer it as a service to allow any small or large platform to easily protect their data from unauthorized use,” he said. “In the age of AI, owning and being able to defend your platform’s data is crucial. While some platforms have the ability to restrict access to their data, others must offer public-facing services and do not have this luxury. This is where solutions like ours come in.”