Despite numerous attempts, home robots have yet to match the widespread success of the revolutionary Roomba. Companies have tackled pricing, practicality, design, and mapping, but one question remains: what happens when the robot makes a mistake?

Industrial robotics faces the same problem, but large companies have the resources to handle issues as they arise. Consumers, on the other hand, can't reasonably be expected to learn programming or hire help every time something goes wrong. Luckily, new research from MIT suggests this is an area where large language models (LLMs) can make a difference in robotics.

“It turns out that robots are excellent mimics,” the school explains. “But unless engineers also program them to adjust to every possible bump and nudge, robots don’t necessarily know how to handle these situations, short of starting their task from the top.”

Imitation learning, in which a robot learns by observing demonstrations, is a popular approach for home robots. However, it struggles to account for the many small environmental changes that can disrupt a task, and when one occurs, the robot may have to restart from the beginning. The new research tackles this by dividing demonstrations into smaller subtasks rather than treating each one as a single continuous action.

This is where LLMs come in: they remove the need for a programmer to manually identify and label each of the subtasks.

“LLMs have a way to tell you how to do each step of a task, in natural language. A human’s continuous demonstration is the embodiment of those steps, in physical space,” says graduate student Tsun-Hsuan Wang. “And we wanted to connect the two, so that a robot would automatically know what stage it is in a task, and be able to replan and recover on its own.”

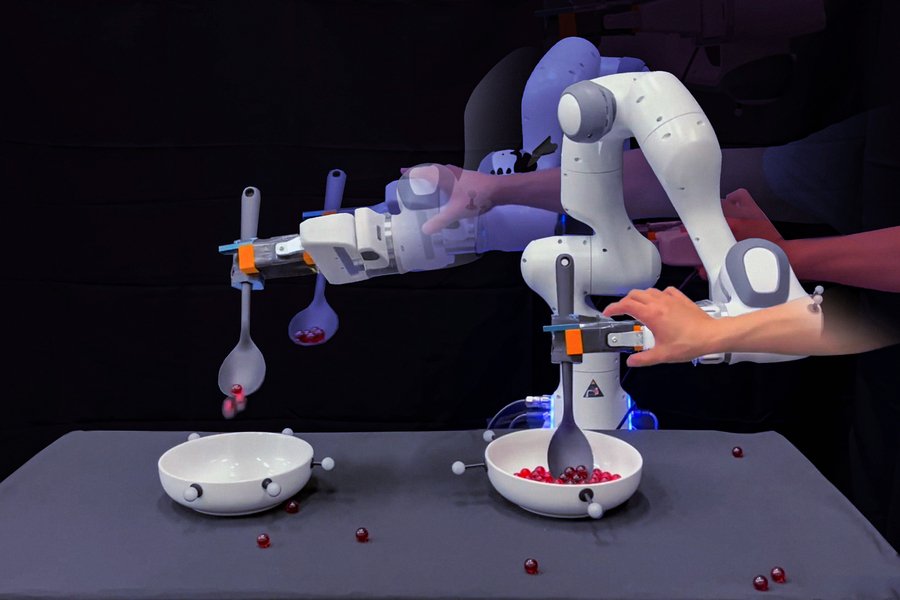

In one demonstration from the study, researchers trained a robot to scoop marbles and pour them into an empty bowl. The task may seem simple and repeatable to a human, but for a robot it is a combination of several smaller subtasks, which an LLM can list and label. To test the system, the researchers deliberately disrupted the activity, bumping the robot off course and knocking marbles out of its spoon. Instead of starting over from the beginning, the robot recovered by correcting the affected subtask.
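The article doesn't describe how the researchers implemented this, but the difference between restarting and resuming can be illustrated with a toy sketch. Everything in it is hypothetical: the subtask names, the `WorldState` fields, and the rule-based `current_stage` function are stand-ins for whatever the real system uses to recognize which stage of the task it is in. The idea shown is simply that the robot re-checks its stage after every step, so a disturbance only replays the subtask it undid.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical subtask labels for the marble task (not taken from the study).
SUBTASKS = ["reach_spoon", "scoop_marbles", "transport", "pour_into_bowl"]


@dataclass
class WorldState:
    # Simplified, hypothetical view of the scene.
    spoon_in_hand: bool = False
    marbles_in_spoon: bool = False
    spoon_over_bowl: bool = False
    marbles_in_bowl: bool = False


def current_stage(state: WorldState) -> Optional[str]:
    """Hypothetical rule-based stage detector: returns the subtask to run next, or None when done."""
    if state.marbles_in_bowl:
        return None
    if not state.spoon_in_hand:
        return "reach_spoon"
    if not state.marbles_in_spoon:
        return "scoop_marbles"
    if not state.spoon_over_bowl:
        return "transport"
    return "pour_into_bowl"


def execute(subtask: str, state: WorldState) -> None:
    """Simulate the effect of one subtask on the world state."""
    if subtask == "reach_spoon":
        state.spoon_in_hand = True
    elif subtask == "scoop_marbles":
        state.marbles_in_spoon = True
        state.spoon_over_bowl = False  # scooping happens back at the marbles
    elif subtask == "transport":
        state.spoon_over_bowl = True
    elif subtask == "pour_into_bowl":
        state.marbles_in_bowl = True
        state.marbles_in_spoon = False


def run(state: WorldState,
        disturbances: Dict[int, Callable[[WorldState], None]],
        max_steps: int = 20) -> List[str]:
    """Run the task, re-detecting the stage each step; disturbances map step index -> state perturbation."""
    log: List[str] = []
    for step in range(max_steps):
        stage = current_stage(state)
        if stage is None:
            return log
        execute(stage, state)
        log.append(stage)
        if step in disturbances:
            disturbances[step](state)  # e.g. a human bumps the robot
    raise RuntimeError("task did not finish within max_steps")


def knock_marbles_out(state: WorldState) -> None:
    state.marbles_in_spoon = False


def bump_off_course(state: WorldState) -> None:
    state.spoon_over_bowl = False


if __name__ == "__main__":
    # Knocking the marbles out after transport replays scoop + transport, not reach_spoon.
    print(run(WorldState(), {2: knock_marbles_out}))
    # Bumping the arm after transport only replays transport.
    print(run(WorldState(), {2: bump_off_course}))
```

In both runs, `reach_spoon` executes only once: the disturbance sends the robot back to the earliest subtask whose effect was undone rather than to the top of the task.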

“With our method, when the robot is making mistakes, we don’t need to ask humans to program or give extra demonstrations of how to recover from failures,” Wang adds.

Overall, the approach offers a practical way to avoid losing your marbles when a robot makes a mistake.