Humanoid robotics has long focused on hardware design. But as developers pursue “general purpose humanoids,” the software matters just as much. Robots built for specific tasks have come a long way, yet the transition to more versatile systems remains a major unsolved challenge.

The quest for a fully adaptable robotic intelligence that can utilize the vast range of movements offered by bipedal humanoid design has been a major topic among researchers. Recently, there has been a surge of interest in the use of generative AI in robotics, with new research from MIT shedding light on how it could impact the development of more advanced systems.

One of the main obstacles to building general purpose robots is training. We have well-established methods for teaching humans new tasks, but in robotics the approaches to training remain fragmented. Methods such as reinforcement learning and imitation learning are promising, and the future of robotics will likely involve a combination of these techniques, enriched by generative AI models.

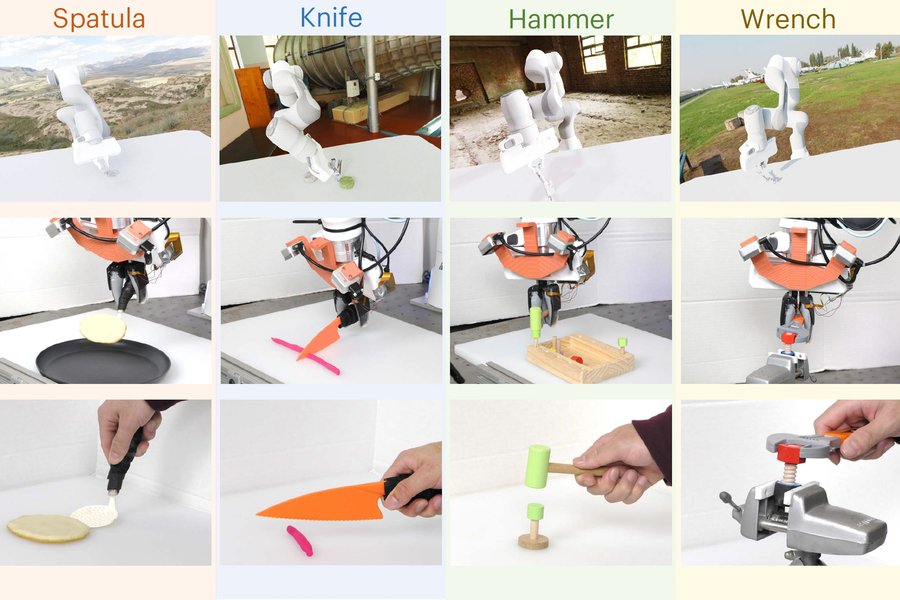

The MIT team’s latest research proposes a method called Policy Composition (PoCo), which learns from small, task-specific datasets. Example tasks include hammering a nail and flipping objects with a spatula.

“[Researchers] train a separate diffusion model to learn a strategy, or policy, for completing one task using one specific dataset,” the school notes. “Then they combine the policies learned by the diffusion models into a general policy that enables a robot to perform multiple tasks in various settings.”
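One way to picture the combination step is as a weighted sum of each policy's denoising direction at every sampling step. The Python sketch below is a toy illustration under stated assumptions, not the researchers' implementation: `make_toy_policy` and `compose_and_sample` are hypothetical names, each "policy" is a closed-form pull toward a target trajectory rather than a trained diffusion network, and the weights and step size are arbitrary.

```python
import numpy as np


def make_toy_policy(target, sigma=1.0):
    """Hypothetical stand-in for a trained diffusion policy.

    Returns a score function that pulls an action trajectory toward `target`.
    A real policy would be a neural network conditioned on observations.
    """
    target = np.asarray(target, dtype=float)

    def score(actions):
        return -(actions - target) / sigma**2

    return score


def compose_and_sample(policies, weights, shape, steps=200, step_size=0.05, seed=0):
    """Refine a random trajectory using the weighted sum of all policies' scores."""
    rng = np.random.default_rng(seed)
    actions = rng.normal(size=shape)  # start from pure noise
    for _ in range(steps):
        # Composition: every policy votes on the update direction.
        combined = sum(w * p(actions) for w, p in zip(weights, policies))
        actions = actions + step_size * combined
    return actions


# Two toy policies, e.g. one standing in for real-world data, one for simulation.
real_policy = make_toy_policy([1.0, 0.0])
sim_policy = make_toy_policy([0.0, 1.0])
trajectory = compose_and_sample([real_policy, sim_policy], [0.5, 0.5], shape=(2,))
```

With equal weights, the sampled trajectory settles between the two targets. Changing the weights shifts the result toward whichever policy is trusted more for a given setting.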

Composing the diffusion policies led to a 20% improvement in task performance compared with baseline techniques, along with the ability to complete tasks that require multiple tools and to adapt to new tasks. By drawing on relevant information from different datasets, the system can work out the sequence of actions needed to complete a task.

Lirui Wang, the paper’s lead author, says: “One of the benefits of this approach is that we can combine policies to get the best of both worlds. For instance, a policy trained on real-world data might be able to achieve more dexterity, while a policy trained on simulation might be able to achieve more generalization.”

The ultimate goal of this research is to create intelligent systems that allow robots to switch between different tools to perform various tasks. The widespread use of multi-purpose systems would bring us closer to achieving the dream of a truly general purpose robot.