Apple announced a deal with OpenAI to bring ChatGPT, OpenAI’s AI-powered chatbot experience, to a range of its devices.

Here’s a roundup of a few of the more noteworthy Apple AI announcements from WWDC 2024.

New Siri

Siri got a makeover courtesy of Apple’s overarching generative AI push this year, called Apple Intelligence.

To take advantage of the new Siri, you’ll need an Apple device that supports Apple Intelligence — specifically the iPhone 15 Pro and devices with M1 or newer chips.

Apple Intelligence will have an understanding of who you’re chatting with, Apple says — so if you want to personalize the chat with a custom AI image, you can do so on the fly.

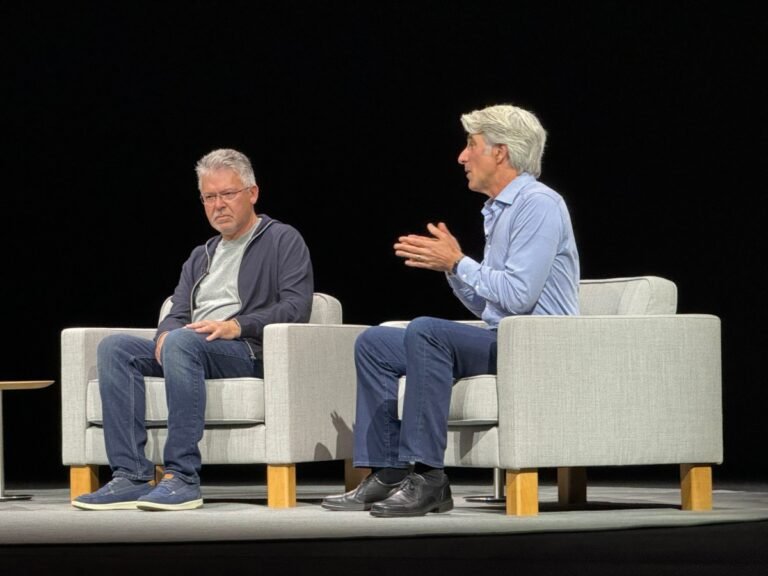

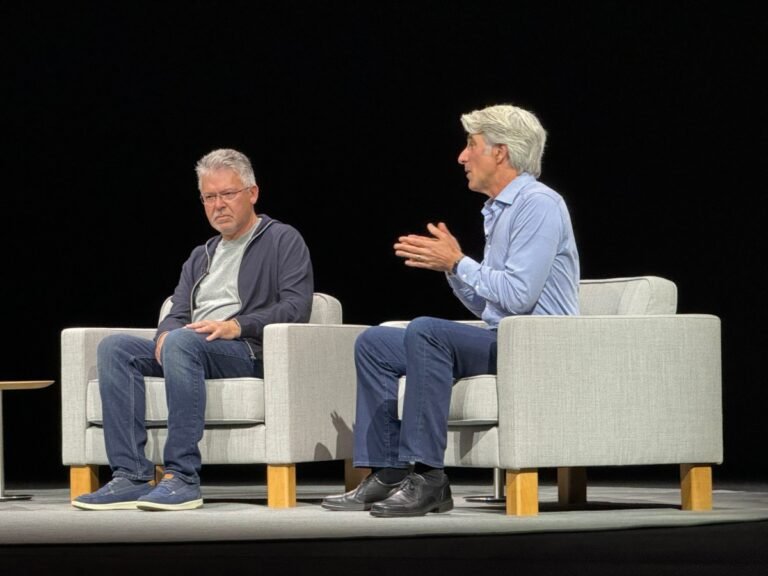

Following a keynote presentation at WWDC 2024 that both introduced Apple Intelligence and confirmed a partnership that brings GPT access to Siri through a deal with OpenAI, SVP Craig Federighi confirmed plans to work with additional third-party models.

The first example given by the executive was one of the companies with which Apple was exploring a partnership.

“We’re looking forward to doing integrations with other models, including Google Gemini, for instance, in the future,” Federighi said during a post-keynote conversation.

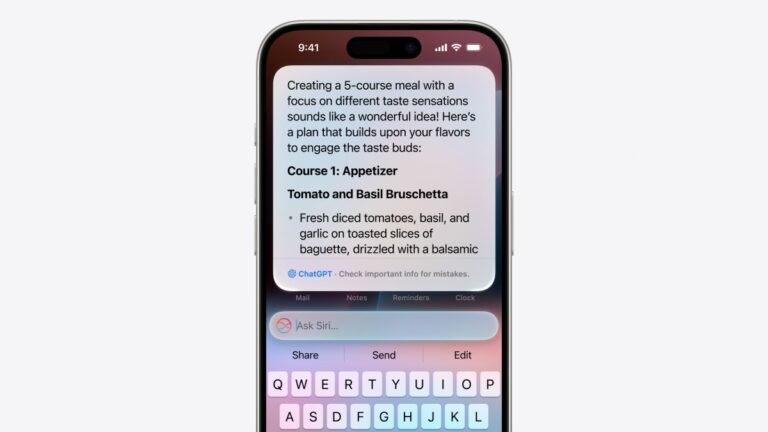

Apple says users will be able to access the system without having to sign up for an account or pay for premium services.

“Now you can do it right through Siri, without going through another tool,” the Apple executive said.

Elon Musk is threatening to ban iPhones from all his companies over the OpenAI integrations Apple announced at WWDC 2024 on Monday.

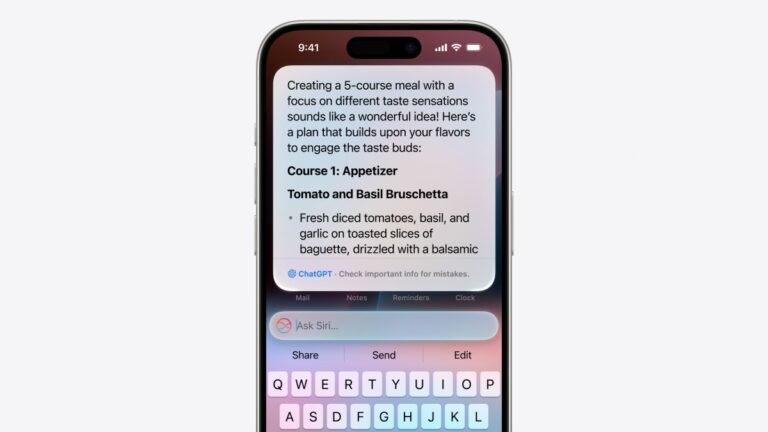

This allows users to get an answer from ChatGPT without having to open the ChatGPT iOS app.

Apple also announced another integration that would allow users to access ChatGPT system-wide within Writing Tools via a “compose” feature.

That’s great news for OpenAI, which will soon have a massive influx of requests from Apple users.

Apple users may not understand the nuances of the privacy issues here, of course — which is what Musk is counting on by making these complaints.

Apple is bringing ChatGPT, OpenAI’s AI-powered chatbot experience, to Siri and other first-party apps and capabilities across its operating systems.

You can include photos with the questions you ask ChatGPT via Siri, or ask questions related to your docs or PDFs.

Apple’s also integrated ChatGPT into system-wide writing tools like Writing Tools, which lets you create content with ChatGPT, including images, or start with an initial idea and send it to ChatGPT to get a revision or variation back.

ChatGPT integrations will arrive on iOS 18, iPadOS 18, and macOS Sequoia later this year, Apple says, and will be free without the need to create a ChatGPT or OpenAI account.

Subscribers to one of OpenAI’s ChatGPT premium plans will be able to access paid features within Siri and Apple’s other apps with ChatGPT integrations.

How many AI models is too many?

We’re seeing a proliferation of models large and small, from niche developers to large, well-funded ones.

And let’s be clear, this is not all of the models released or previewed this week!

Other large language models like LLaMa or OLMo, though technically speaking they share a basic architecture, don’t actually fill the same role.

The other side of this story is that we were already in this stage long before ChatGPT and the other big models came out.

The most notable bit of today’s news, however, is probably Nothing’s embrace of ChatGPT this time out.

Think Siri/Google Assistant/Alexa-style access on a pair of earbuds, only this one taps directly into OpenAI’s wildly popular platform.

Nothing says the Ear buds bring improved sound over their predecessors, courtesy of a new driver system.

A “smart” active noise-canceling system adapts accordingly to environmental noise and checks for “leakage” between the buds and the ear canal.

The Ear and Ear (a) are both reasonably priced at $149 and $99, respectively.

ChatGPT, OpenAI’s viral AI-powered chatbot, just got a big upgrade.

OpenAI announced today that premium ChatGPT users (customers paying for ChatGPT Plus, Team or Enterprise) can now leverage an updated and enhanced version of GPT-4 Turbo, one of the models that powers the conversational ChatGPT experience.

It was trained on publicly available data up to December 2023, in contrast to the previous edition of GPT-4 Turbo available in ChatGPT, which had an April 2023 cut-off.

“When writing with ChatGPT [with the new GPT-4 Turbo], responses will be more direct, less verbose and use more conversational language,” OpenAI writes in a post on X.

“Our new GPT-4 Turbo is now available to paid ChatGPT users,” the post reads.

OpenAI is making its flagship conversational AI accessible to everyone, even people who haven’t bothered making an account.

Instead, you’ll be dropped right into conversation with ChatGPT, which will use the same model as logged-in users.

You can chat to your heart’s content, but be aware you’re not getting quite the same set of features that folks with accounts are.

You won’t be able to save or share chats, use custom instructions, or do other stuff that generally has to be associated with a persistent account.

OpenAI offers this helpful gif:

More importantly, this extra-free version of ChatGPT will have “slightly more restrictive content policies.” What does that mean?

OpenAI’s legal battle with The New York Times over data to train its AI models might still be brewing.

But OpenAI’s forging ahead on deals with other publishers, including some of France’s and Spain’s largest news publishers.

OpenAI on Wednesday announced that it signed contracts with Le Monde and Prisa Media to bring French and Spanish news content to OpenAI’s ChatGPT chatbot.

So, OpenAI’s revealed licensing deals with a handful of content providers at this point.

The Information reported in January that OpenAI was offering publishers between $1 million and $5 million a year to access archives to train its GenAI models.

Covariant is building ChatGPT for robots

The UC Berkeley spinout says its new AI platform can help robots think more like people

Covariant this week announced the launch of RFM-1 (Robotics Foundation Model 1).

“We at Covariant have already deployed lots of robots at warehouses with success.

“We do like a lot of the work that is happening in the more general purpose robot hardware space,” says Chen.

“ChatGPT for robots” isn’t a perfect analogy, but it’s a reasonable shorthand (especially in light of the founders’ connection to OpenAI).

Chen says the company expects the new RFM-1 platform will work with a “majority” of the hardware on which Covariant software is already deployed.