This week in AI, Apple stole the spotlight.

At the company’s Worldwide Developers Conference (WWDC) in Cupertino, Apple unveiled Apple Intelligence, its long-awaited, ecosystem-wide push into generative AI.

The company promised Apple Intelligence is being built with safety at its core, along with highly personalized experiences.

Apple revealed in a blog post that it trains the AI models that power Apple Intelligence on a combination of licensed datasets and the public web.

Grab bag

This week marked the sixth anniversary of the release of GPT-1, the progenitor of GPT-4o, OpenAI’s latest flagship generative AI model.

Now rebranded as Mosaic AI, the platform has become integral to Databricks’ AI solutions.

Today, at the company’s Data + AI Summit, it is launching a number of new features for the service.

Databricks is launching five new Mosaic AI tools at its conference: Mosaic AI Agent Framework, Mosaic AI Agent Evaluation, Mosaic AI Tools Catalog, Mosaic AI Model Training, and Mosaic AI Gateway.

“And we’ve also found in our internal AI applications, like the assistant applications for our platform, that this is the way to build them,” he said.

But when you really pushed people, they were using OpenAI.

It’s something Apple is striving to answer with its own take on the category, Apple Intelligence, which was officially unveiled this week at WWDC 2024.

Apple Intelligence is a more bespoke approach to generative AI, built specifically with the company’s different operating systems at their foundation.

It’s a very Apple approach in the sense that it prioritizes a frictionless user experience above all.

The operating systems also feature a feedback mechanism into which users can report issues with the generative AI system.

This should function the same with all external models Apple partners with, including Google Gemini.

Jordan Meyer and Mathew Dryhurst founded Spawning AI to create tools that help artists exert more control over how their works are used online.

Meyer claims that, despite the fact that it’s substantially smaller than some other generative AI training data sets out there, Source.Plus’ data set is already “high-quality” enough to train a state-of-the-art image-generating model.

Generative AI models “learn” to produce their outputs (e.g., photorealistic art) by training on a vast quantity of relevant data — images, in that case.

“Source.Plus isn’t just a repository for training data; it’s an enrichment platform with tools to support the training pipeline,” he continued.

And, Meyer says, Spawning might build its own generative AI models using data from the Source.Plus datasets.

Mistral AI has closed its much-rumored Series B funding round, with General Catalyst leading the round.

The company has secured €600 million (around $640 million at today’s exchange rate) in a mix of equity and debt.

As a reminder, Mistral AI is a relatively new entrant in the artificial intelligence space.

It also has distribution partnerships with cloud providers, such as Microsoft Azure — Microsoft is also a minor shareholder in Mistral AI.

According to the Financial Times, Mistral AI raised €468 million in equity and €132 million in debt (around $500 million and $140 million respectively).

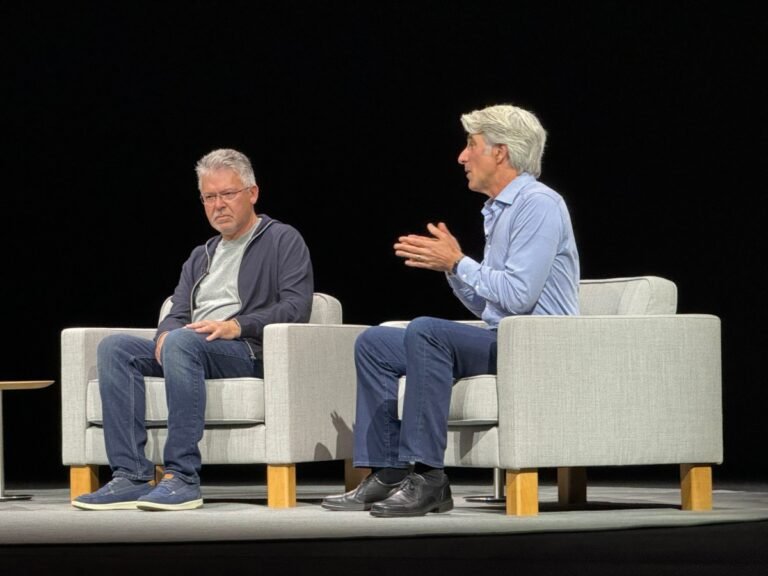

Following a keynote presentation at WWDC 2024 that both introduced Apple Intelligence and confirmed a partnership that brings GPT access to Siri through a deal with OpenAI, SVP Craig Federighi confirmed plans to work with additional third-party models.

The first example given by the executive was one of the companies with which Apple was exploring a partnership.

“We’re looking forward to doing integrations with other models, including Google Gemini, for instance, in the future,” Federighi said during a post-keynote conversation.

Apple says users will be able to access the system without having to sign up for an account or pay for premium services.

“Now you can do it right through Siri, without going through another tool,” the Apple executive said.

In a research note, HSBC estimates that the Indian edtech giant, once valued at $22 billion, is now worth nothing.

The write-down in its estimation makes Byju’s one of the most spectacular startup slides in recent memory and follows a very rough year for what was India’s most valuable startup not long ago.

After raising $100 million, AI mortgage startup LoanSnap is facing an avalanche of lawsuits and has been evicted from its main office.

At least seven creditors, including Wells Fargo, have collectively alleged that the company owes them more than $2 million.

Turns out, AI models have favorite numbers: Engineers at Gramener performed an informal experiment where they asked several major LLM chatbots to pick a random number between 0 and 100.

The subject has been a massive question mark looming over Cupertino for the last few years, as competitors like Google and Microsoft have embraced generative AI.

Apple’s near-term strategy is a deep integration between existing properties and generative AI, with Siri at the center.

Rather than replacing Assistant outright, Google has been integrating its generative AI platform into different applications.

Smart speakers have been a broader bellwether for platforms like Siri, Alexa and Google Assistant.

“Other people do it well.” The company’s approach to generative AI is currently in the same place.

Only a few years ago, one of the hottest topics in enterprise software was ‘robotic process automation’ (RPA).

The rise of generative AI, however, may just be the missing key to building these kinds of systems.

“Last year, generative AI happened and I realized that it unlocks some software scenarios that were impossible before,” Surpatanu said.

“You have to combine it with more traditional software if you want to squeeze the best out of it,” he said.

Generative AI, Surpatanu argues, can bring to these systems a degree of adaptability to context and an understanding of the user’s intent that wasn’t really possible before, both of which RPA often struggles with.

Not all generative AI models are created equal, particularly when it comes to how they treat polarizing subject matter.

They found that the models tended to answer questions inconsistently, which reflects biases embedded in the data used to train the models, they say.

“Our research shows significant variation in the values conveyed by model responses, depending on culture and language.”

Text-analyzing models, like all generative AI models, are statistical probability machines.

Instrumental to an AI model’s training data are annotations, or labels that enable the model to associate specific concepts with specific data (e.g.

Other studies have examined the deeply ingrained political, racial, ethnic, gender and ableist biases in generative AI models — many of which cut across languages, countries and dialects.