The vulnerability is a new one, resulting from the increased “context window” of the latest generation of LLMs.

But in an unexpected extension of this “in-context learning,” as it’s called, the models also get “better” at replying to inappropriate questions.

So if you ask it to build a bomb right away, it will refuse.

But if you ask it to answer 99 other questions of lesser harmfulness and then ask it to build a bomb… it’s a lot more likely to comply.

If the user wants trivia, the model seems to gradually activate more latent trivia power as the user asks dozens of questions.

In its early days, OctoAI focused almost exclusively on optimizing models to run more effectively.

With the rise of generative AI, the team then launched the fully managed OctoAI platform to help its users serve and fine-tune existing models.

OctoStack, at its core, is that OctoAI platform, but for private deployments.

Deploying OctoStack should be straightforward for most enterprises, as OctoAI delivers the platform with ready-to-go containers and their associated Helm charts for deployments.

For developers, the API remains the same, no matter whether they are targeting the SaaS product or OctoAI in their private cloud.
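As a rough illustration of what "the API remains the same" can mean in practice, the sketch below builds an identical request payload for two deployment targets and changes only the base URL. Everything in it is hypothetical: the `InferenceTarget` class, the `build_request` helper, the `completions` path and both hostnames are placeholders, not OctoAI's actual API.

```python
from dataclasses import dataclass
from urllib.parse import urljoin


@dataclass(frozen=True)
class InferenceTarget:
    """A deployment target; only the base URL differs (hypothetical)."""
    base_url: str

    def endpoint(self, path: str) -> str:
        # Normalize slashes so the path is appended rather than replacing a segment.
        return urljoin(self.base_url.rstrip("/") + "/", path.lstrip("/"))


# Placeholder hosts, not real service addresses.
SAAS = InferenceTarget("https://saas.example.com/v1")
PRIVATE = InferenceTarget("https://octostack.internal.example/v1")


def build_request(target: InferenceTarget, model: str, prompt: str) -> dict:
    """Describe the HTTP request; the JSON body is the same for every target."""
    return {
        "url": target.endpoint("completions"),  # assumed path, for illustration
        "json": {"model": model, "prompt": prompt},
    }


saas_request = build_request(SAAS, "example-model", "Hello")
private_request = build_request(PRIVATE, "example-model", "Hello")
print(saas_request["json"] == private_request["json"])
```

Under these assumptions, moving an application from the hosted product to a private deployment is a configuration change rather than a code change.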

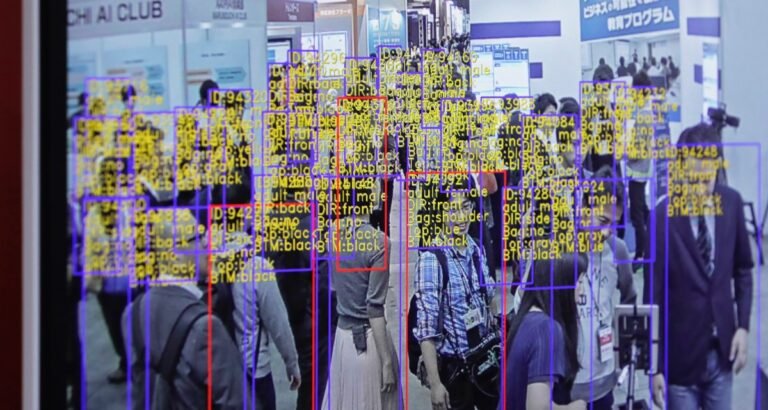

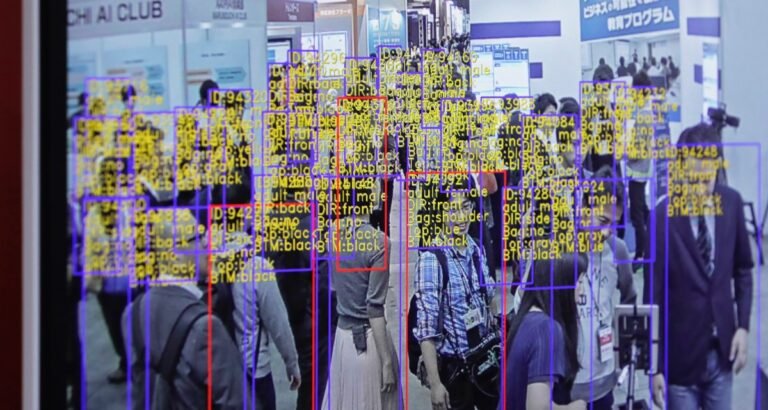

This week in AI, I’d like to turn the spotlight on labeling and annotation startups — startups like Scale AI, which is reportedly in talks to raise new funds at a $13 billion valuation.

Labeling and annotation platforms might not get the attention flashy new generative AI models like OpenAI’s Sora do.

Without them, modern AI models arguably wouldn’t exist.

Labels, or tags, help the models understand and interpret data during the training process.

Some of the tasks on Scale AI take labelers multiple eight-hour workdays — no breaks — and pay as little as $10.

X.ai, Elon Musk’s AI startup, has revealed its latest generative AI model, Grok-1.5.

Grok-1.5 benefits from “improved reasoning,” according to X.ai, particularly where it concerns coding and math-related tasks.

One improvement that should lead to observable gains is the amount of context Grok-1.5 can take in compared to Grok-1.

Context, or context window, refers to input data (in this case, text) that a model considers before generating output (more text).
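A minimal sketch of the idea, assuming whitespace-separated words stand in for tokens (real models use subword tokenizers) and assuming older input is simply dropped once the window is full. The `fit_to_context` helper is illustrative and does not describe how Grok-1.5 handles its input.

```python
def fit_to_context(tokens: list[str], context_window: int) -> list[str]:
    """Return the most recent tokens that fit inside the context window."""
    if context_window <= 0:
        return []
    # Anything earlier than the last `context_window` tokens is not seen by the model.
    return tokens[-context_window:]


history = "the quick brown fox jumps over the lazy dog".split()
print(fit_to_context(history, 4))   # ['over', 'the', 'lazy', 'dog']
print(fit_to_context(history, 20))  # the whole input fits, so nothing is dropped
```

A larger window lets more of the earlier input stay visible to the model when it generates output.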

The announcement of Grok-1.5 comes after X.ai open sourced Grok-1, albeit without the code necessary to fine-tune or further train it.

Increasingly, the AI industry is moving toward generative AI models with longer contexts.

Ori Goshen, the CEO of AI startup AI21 Labs, asserts that this doesn’t have to be the case — and his company is releasing a generative model to prove it.

Contexts, or context windows, refer to input data (e.g.

Trained on a mix of public and proprietary data, Jamba can write text in English, French, Spanish and Portuguese.

Loads of freely available, downloadable generative AI models exist, from Databricks’ recently released DBRX to the aforementioned Llama 2.

You could spend it training a generative AI model.

See Databricks’ DBRX, a new generative AI model announced today akin to OpenAI’s GPT series and Google’s Gemini.

Customers can privately host DBRX using Databricks’ Model Serving offering, Rao suggested, or they can work with Databricks to deploy DBRX on the hardware of their choosing.

It’s an easy way for customers to get started with the Databricks Mosaic AI generative AI tools.

And plenty of generative AI models come closer to the commonly understood definition of open source than DBRX.

Adobe today announced Firefly Services, a set of over 20 new generative and creative APIs, tools and services.

Firefly Services makes some of the company’s AI-powered features from its Creative Cloud tools like Photoshop available to enterprise developers to speed up content creation in their custom workflows — or create entirely new solutions.

In addition, the company today launched Custom Models, which allows businesses to fine-tune Firefly models based on their assets.

Custom Models is already built into Adobe’s new GenStudio.

In addition to these AI features, Firefly Services also exposes tools for editing text layers, tagging content and applying presets from Lightroom, for example.

Brands want to use generative AI to personalize their marketing efforts — but they are also deathly afraid of AI going off message and ruining their brand.

At its annual Summit conference in Las Vegas, Adobe today announced GenStudio, a new application that helps brands create content and measure its performance, with generative AI — and the promise of brand safety — at its center.

Adobe wants GenStudio, which it first previewed last September, to be an end-to-end solution to help marketers tailor their content to different channels and audience segments.

That, of course, is where generative AI comes in, since it can speed up content creation dramatically.

The tool also continuously checks that anything a user creates in GenStudio is within a brand’s guidelines.

Just about everyone is trying to get a piece of the generative AI action these days.

While lacking the brand name recognition of some of these other players, it boasts the largest open source model API with over 12,000 users, per the company.

That kind of open source traction tends to attract investor attention, and the company has raised $25 million so far.

“It can be either off the shelf, open source models or the models we tune or the models our customer can tune by themselves.

Because it is an API, developers can plug it into their application, bring their model of choice trained on their data, and very quickly add generative AI capabilities like asking questions.

Even when some or all of those are addressed, there remains the question of what happens when a system makes an inevitable mistake.

We can’t, however, expect consumers to learn to program or hire someone who can help any time an issue arises.

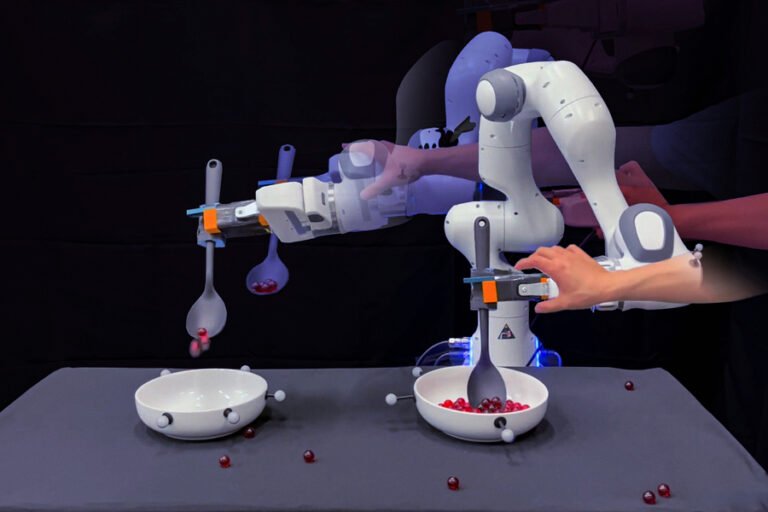

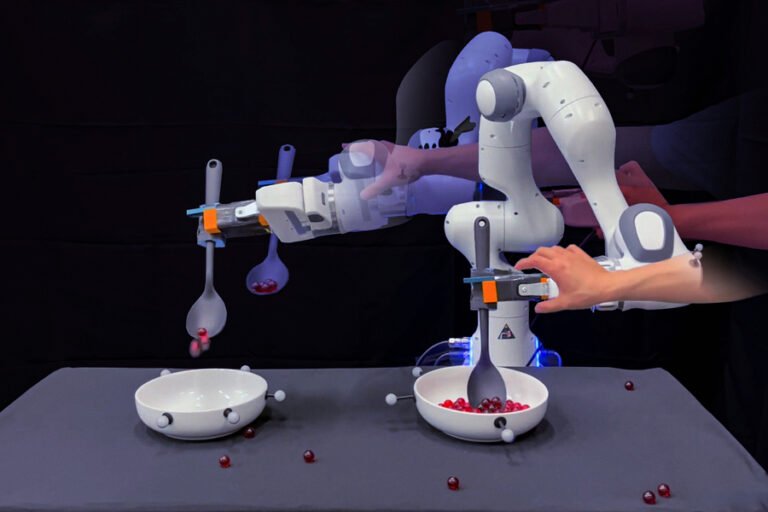

Thankfully, this is a great use case for LLMs (large language models) in the robotics space, as exemplified by new research from MIT.

“LLMs have a way to tell you how to do each step of a task, in natural language.

It’s a simple, repeatable task for humans, but for robots, it’s a combination of various small tasks.