GitLab plans to integrate AI further into its security features to help developers protect their code against potential vulnerabilities. A large language model will explain vulnerabilities to developers in a way that is easy to understand, and the plan is for AI to then resolve detected issues automatically. This could help improve the security of GitLab installations worldwide.

Code comprehension has long been a challenge for developers, and GitLab is continuing to explore potential solutions by introducing new experimental features. With the new code summary feature, users can quickly see what a particular piece of code does and how it affects the overall structure of the program. This could make onboarding new developers significantly easier, as they would not need to comb through long stretches of code to understand their purpose. Additionally, GitLab’s recently announced similar tool, which explains machine code to developers, could be useful for those with more programming experience. By presenting complex code in an easy-to-understand form, this feature may make deciphering errors more straightforward even for experienced programmers.

GitLab is a software development platform offering Git source control, issue tracking and collaborative development tools.

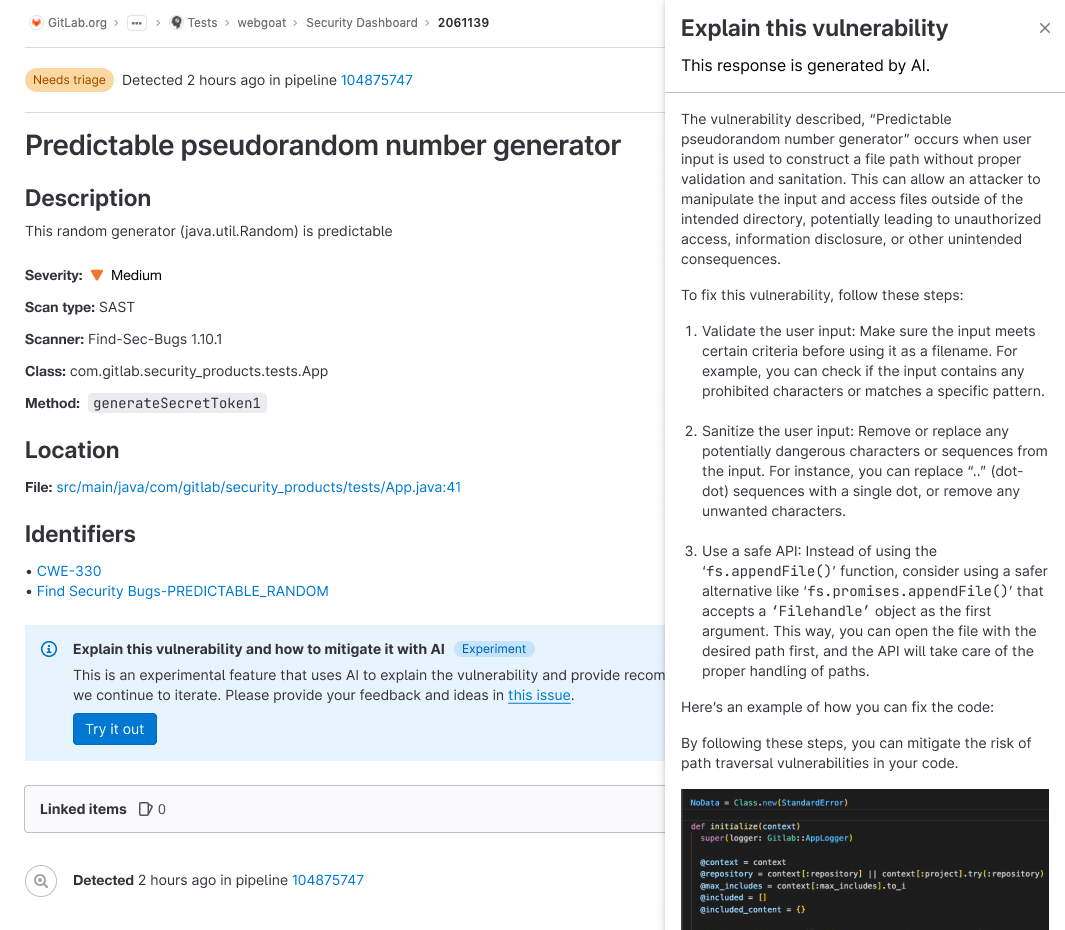

The “explain this vulnerability” feature of the code analysis tool can be a valuable aid to remediation. It combines information about the vulnerability with insights from the user’s codebase, which makes issues easier and faster to fix. With this context, teams can better understand how an issue affects their codebase and make informed decisions about how to address it.

The company describes its philosophy as “velocity with guardrails”: any AI features added to a system should be safe and compliant. It pursues this by combining AI code and test generation with its full-stack DevSecOps platform.

GitLab says privacy is of utmost importance to its team. The company says it will not send customer data outside its own organization and that all of its AI features are built with this concern in mind. The aim is that customers’ private information is never accessed or used in any way by GitLab.

GitLab’s AI initiative is aimed at improving the efficiency of the entire development process, from individual developers to the whole development lifecycle. Although it can be tempting to focus on boosting productivity for individual developers, inefficiencies further downstream, in reviewing code and putting it into production, can quickly negate any gains. GitLab is working on AI-powered tools to ease these processes and achieve greater overall efficiency.

To make its software more secure, Lockheed Martin has invested in a more efficient development process. This has resulted in a reported 20% increase in the effectiveness of the software, a gain felt across the company as a whole.

Extending the “explain this code” feature helps QA and security teams better understand both code and vulnerabilities. As the GitLab team expands this feature to explain vulnerabilities as well, it aims to build further capabilities that help automate unit tests and security reviews.

By using AI and ML in their testing efforts, developers can catch issues and correct errors before their code reaches a code reviewer. This saves both time and resources, as reviewers can focus on more important tasks instead of hunting for coding errors.

GitLab’s DevSecOps platform helps automate enforcing compliance frameworks and performing security tests, and it provides AI-assisted recommendations, freeing team members to focus on more important tasks. This enables teams to manage their resources more efficiently while maintaining a high level of quality assurance.