Human Native AI is a London-based startup building a marketplace to broker such deals between the many companies building LLM projects and those willing to license data to them.

Human Native AI also helps rights holders prepare and price their content and monitors for any copyright infringements.

Human Native AI takes a cut of each deal and charges AI companies for its transaction and monitoring services.

Human Native AI announced a £2.8 million seed round led by LocalGlobe and Mercuri, two British micro VCs, this week.

It is also a smart time for Human Native AI to launch.

The company on Wednesday introduced a beta of what it’s calling ‘AI teammates,’ in a bid to help move work inside an organization.

“We believe that the future of work is humans not just working with humans, but humans also working with AI,” Costello told TechCrunch.

“The work graph enables us to tell AI not just how work happens, but how work happens in this specific instance.

So when we embed AI teammates into a particular workflow, they’re given a specific job to do.

“We have found that we’re able to embed AI teammates to remove a lot of administrative work and tracking work within these systems very quickly, with high degrees of success.

In the short term, many employers have complained of an inability to fill roles and retain workers, further accelerating robotic adoption.

One aspect of the conversation that is oft neglected, however, is how human workers feel about their robotic colleagues.

But could the technology also have a negative impact on worker morale?

The institute reports a negative impact on worker-perceived meaningfulness and autonomy levels.

As long as robots have a positive impact on a corporation’s bottom line, adoption will continue at a rapidly increasing clip.

Seven Waymo robotaxis blocked traffic moving onto the Potrero Avenue 101 on-ramp in San Francisco on Tuesday at 9:30 p.m., according to video of the incident posted to Reddit and confirmation from Waymo.

California regulators recently approved Waymo to operate its autonomous robotaxi service on San Francisco freeways without a human driver, but the company is still only testing on freeways with a human driver in the front seat.

After hitting the road closure, the first Waymo vehicle in the lineup pulled over, out of the traffic lane that was blocked by cones, followed by six other Waymo robotaxis.

It’s not the first time Waymo vehicles have caused a road blockage, but this is the first documented incident involving a freeway.

In San Francisco, there must be a driver in the car in order to issue a citation.

It is, after all, a lot easier to generate press for robots that look and move like humans.

For a while now, Collaborative Robotics founder Brad Porter has eschewed robots that look like people.

As the two-year-old startup’s name implies, Collaborative Robotics (Cobot for short) is interested in the ways in which humans and robots will collaborate, moving forward.

When his run with the company ended in summer 2020, he was leading the retail giant’s industrial robotics team.

AI will, naturally, be foundational to the company’s promise of “human problem solving,” while the move away from the humanoid form factor is a bid, in part, to reduce the cost of entry for deploying these systems.

Even when some or all of those are addressed, there remains the question of what happens when a system makes an inevitable mistake.

We can’t, however, expect consumers to learn to program or hire someone who can help any time an issue arises.

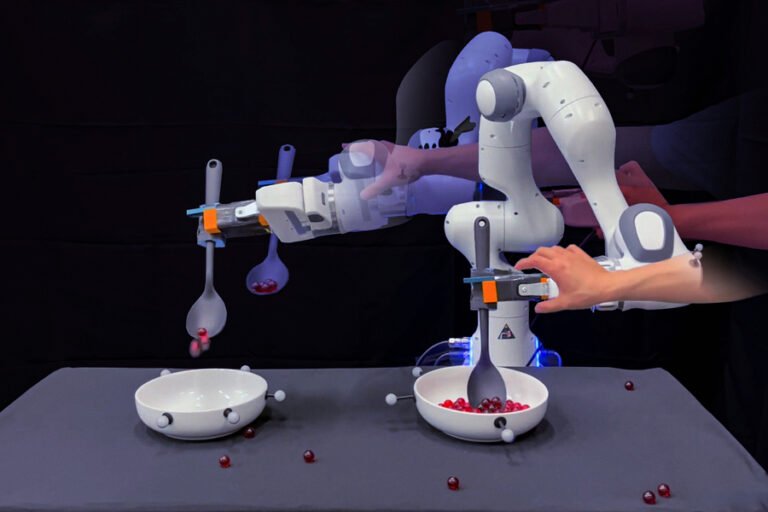

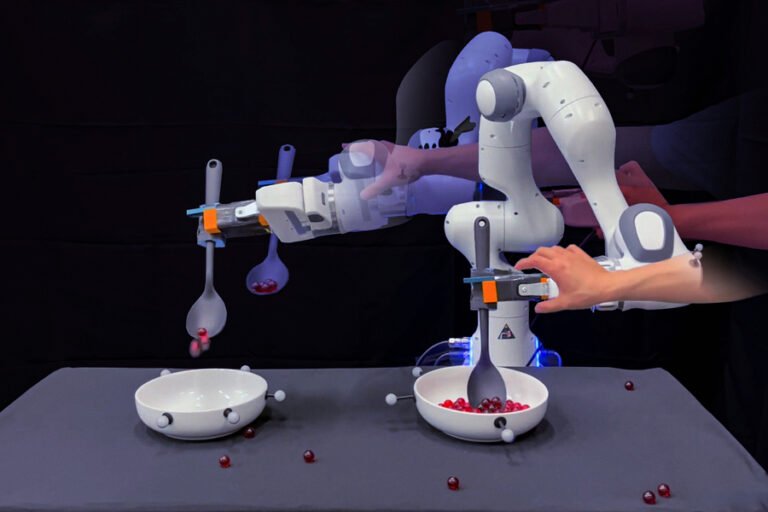

Thankfully, this is a great use case for LLMs (large language models) in the robotics space, as exemplified by new research from MIT.

“LLMs have a way to tell you how to do each step of a task, in natural language.

It’s a simple, repeatable task for humans, but for robots, it’s a combination of various small tasks.

So in response, Google — thousands of jobs lighter than it was last fiscal quarter — is funneling investments toward AI safety.

This morning, Google DeepMind, the AI R&D division behind Gemini and many of Google’s more recent GenAI projects, announced the formation of a new organization, AI Safety and Alignment — made up of existing teams working on AI safety but also broadened to encompass new, specialized cohorts of GenAI researchers and engineers.

But it did reveal that AI Safety and Alignment will include a new team focused on safety around artificial general intelligence (AGI), or hypothetical systems that can perform any task a human can.

The AI Safety and Alignment organization’s other teams are responsible for developing and incorporating concrete safeguards into Google’s Gemini models, current and in-development.

One might assume issues as grave as AGI safety (and the longer-term risks the AI Safety and Alignment organization intends to study, including preventing AI from “aiding terrorism” and “destabilizing society”) require a director’s full-time attention.

After serving as an AI policy manager at Zillow for nearly a year, she joined Hugging Face as the head of global policy.

Her responsibilities there range from building and leading company AI policy globally to conducting socio-technical research.

Solaiman also advises the Institute of Electrical and Electronics Engineers (IEEE), the professional association for electronics engineering, on AI issues, and is a recognized AI expert at the intergovernmental Organization for Economic Co-operation and Development (OECD).

Irene Solaiman, head of global policy at Hugging Face

Briefly, how did you get your start in AI?

The means by which we improve AI safety should be collectively examined as a field.

Much progress has been made since then, and 2024 — the 20th anniversary of Ghostrider — will be another seminal year for autonomous vehicles, especially for off-road industries.

The possibilities off-road

For commercial operations, there is another compelling reason for deploying autonomous vehicles in these conditions: increased profits.

Safety remains a concern

Safety remains the paramount metric for autonomous vehicle deployment, yet there has to be industry consensus on how to adequately measure a robotic or human driver’s safety.

To increase transparency, the California DMV logs autonomous vehicle collisions.

ImpriMed, a California-based precision medicine startup, builds AI-powered dog cancer treatment technology that helps veterinarians identify the most suitable drugs for individual canine and feline blood cancers.

The startup, which focused first on improving treatment outcomes for dogs and cats with cancer, now aims to expand its precision medicine technology to human oncology applications.

“Also, the proven know-how acquired from developing AI algorithms in veterinary oncology streamlines the building of new predictive models in human oncology.

For human precision oncology, its AI software for multiple myeloma, a rare blood cancer, is in the approval process, and the company aims to commercialize it in 2025, Lim told TechCrunch.

ImpriMed’s unique strength is “the ability to develop and incorporate AI models into the personalized medicine service workflow,” according to Lim.