Twitch has come up with a solution for the ongoing copyright issues that DJs encounter on the platform.

DJs participating in the program must contribute a percentage of their stream earnings to cover some of the music rights costs.

Twitch partnered with all the major labels, including Universal Music Group, Warner Music Group, and Sony Music, as well as several independent labels represented by music licensing partner Merlin, to bring a majority of popular music to the offering.

Additionally, a one-year subsidy is being offered to existing Twitch DJs, providing financial support and a transition period to adapt to the new program.

Twitch will soon require DJs to share part of their revenue with the music industry.

Meta has announced changes to its rules on AI-generated content and manipulated media following criticism from its Oversight Board.

So, for AI-generated or otherwise manipulated media on Meta platforms like Facebook and Instagram, the playbook appears to be: more labels, fewer takedowns.

“Our ‘Made with AI’ labels on AI-generated video, audio and images will be based on our detection of industry-shared signals of AI images or people self-disclosing that they’re uploading AI-generated content,” said Bickert, noting the company already applies ‘Imagined with AI’ labels to photorealistic images created using its own Meta AI feature.

Meta’s blog post highlights a network of nearly 100 independent fact-checkers which it says it’s engaged with to help identify risks related to manipulated content.

These external entities will continue to review false and misleading AI-generated content, per Meta.

India, grappling with election misinfo, weighs up labels and its own AI safety coalition

An Adobe-backed association wants to help organizations in the country with an AI standard

India, long in the tooth when it comes to co-opting tech to persuade the public, has become a global hotspot when it comes to how AI is being used, and abused, in political discourse, and specifically the democratic process.

Tech companies, who built the tools in the first place, are making trips to the country to push solutions.

Using its open standard, the C2PA has developed a digital nutrition label for content called Content Credentials.

It also automatically attaches to AI content generated by Adobe’s AI model Firefly.

“That’s a little ‘CR’… it’s two western letters like most Adobe tools, but this indicates there’s more context to be shown,” he said.

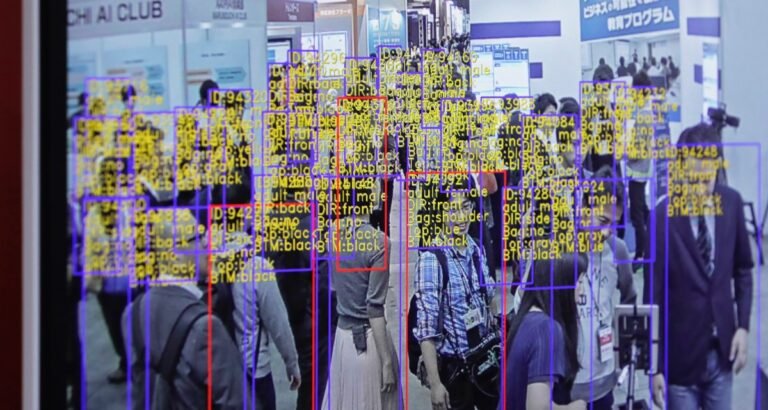

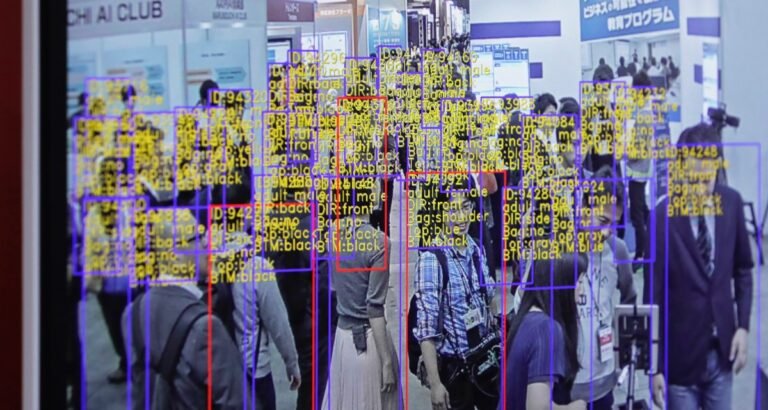

This week in AI, I’d like to turn the spotlight on labeling and annotation startups — startups like Scale AI, which is reportedly in talks to raise new funds at a $13 billion valuation.

Labeling and annotation platforms might not get the attention flashy new generative AI models like OpenAI’s Sora do.

Without them, modern AI models arguably wouldn’t exist.

Labels, or tags, help the models understand and interpret data during the training process.

Some of the tasks on Scale AI take labelers multiple eight-hour workdays — no breaks — and pay as little as $10.

YouTube is now requiring creators to disclose to viewers when realistic content was made with AI, the company announced on Monday.

YouTube says the new policy doesn’t require creators to disclose content that is clearly unrealistic or animated, such as someone riding a unicorn through a fantastical world.

It also isn’t requiring creators to disclose content that used generative AI for production assistance, like generating scripts or automatic captions.

Creators will have to disclose content that alters footage of real events or places, such as making it seem as though a real building caught fire.

Creators will also have to disclose when they have generated realistic scenes of fictional major events, like a tornado moving toward a real town.

This sudden change by Twitter is likely in response to criticism the social media platform has faced in recent months over its transparency policy. Blue mirrors, which are used to…

The study found that while many of the top apps on the Play Store display labels indicating that the app collects anonymous or aggregate data, in reality, many of those…