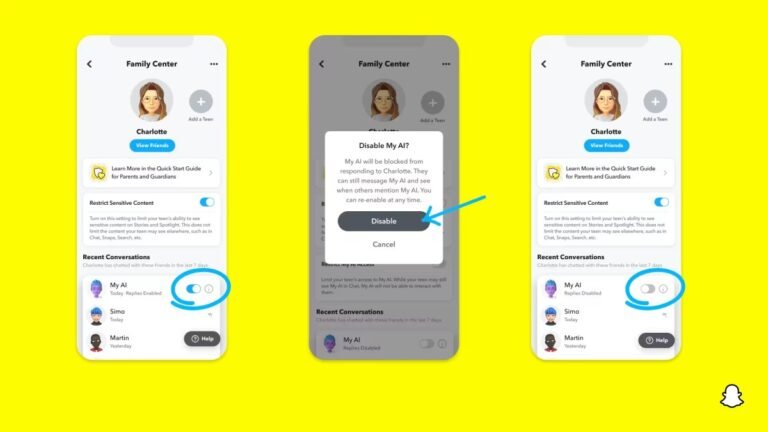

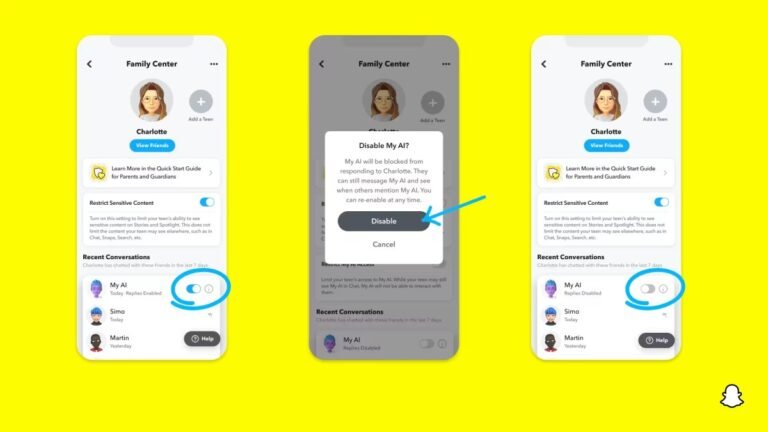

Snapchat is introducing new parental controls that will allow parents to restrict their teens from interacting with the app’s AI chatbot.

The changes will also allow parents to view their teens’ privacy settings, and get easier access to Family Center, which is the app’s dedicated place for parental controls.

Parents can now restrict My AI, Snapchat’s AI-powered chatbot, from responding to chats from their teen.

As for parents who may be unaware of the app’s parental controls, Snapchat is making Family Center easier to find.

Parents can now find Family Center right from their profile, or by heading to their settings.

AI aids nation-state hackers but also helps US spies to find them, says NSA cyber director

Nation state-backed hackers and criminals are using generative AI in their cyberattacks, but U.S. intelligence is also using artificial intelligence technologies to find malicious activity, according to a senior U.S. National Security Agency official.

“We already see criminal and nation state elements utilizing AI.”

“We’re seeing intelligence operators [and] criminals on those platforms,” said Joyce.

“On the flip side, though, AI, machine learning [and] deep learning is absolutely making us better at finding malicious activity,” he said.

“Machine learning, AI, and big data helps us surface those activities [and] brings them to the fore because those accounts don’t behave like the normal business operators on their critical infrastructure, so that gives us an advantage,” Joyce said.

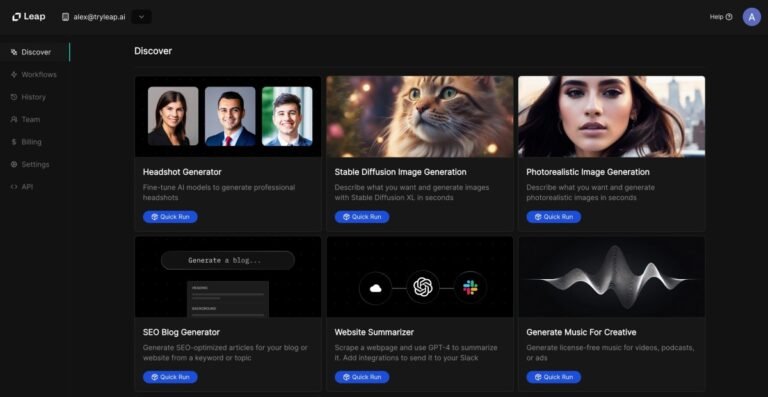

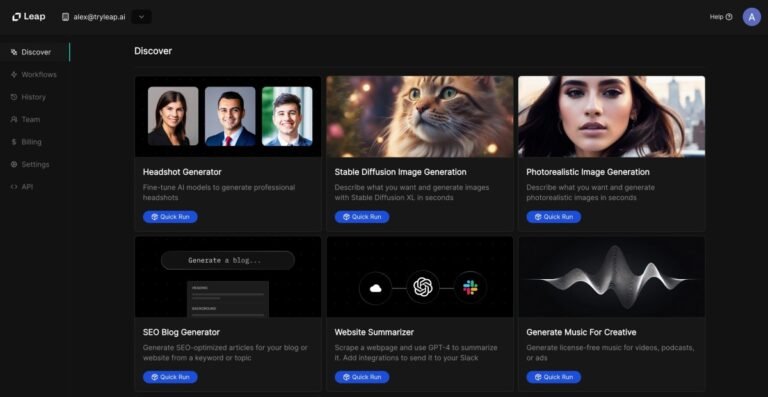

Leap AI is building a solution for these companies to easily integrate AI-powered workflows or even build their own using an easy process.

It also offers paid plans starting at $29 per month, which include more credits, no limit on building workflows, and customer support.

Why did the founders build Leap AI?

“Another unique aspect is that we provide the interoperability between multiple models, multiple vendors, and multiple companies,” he said.

Leap AI is also working on improving context awareness of its workflows so it can leverage previously generated results.

YouTube is updating its harassment and cyberbullying policies to clamp down on content that “realistically simulates” deceased minors or victims of deadly or violent events describing their death.

The policy change comes as some true crime content creators have been using AI to recreate the likeness of deceased or missing children.

In these disturbing instances, people are using AI to give child victims of high-profile cases a childlike “voice” to describe their deaths.

In recent months, content creators have used AI to narrate numerous high-profile cases including the abduction and death of British two-year-old James Bulger, as reported by the Washington Post.

TikTok’s policy allows it to take down realistic AI images that aren’t disclosed.

In October, Box unveiled a new pricing approach for the company’s generative AI features.

Instead of a flat rate, the company designed a unique consumption-based model.

Each user gets 20 credits per month, good for any number of AI tasks that add up to 20 events, with each task charged a single credit.

If the customer surpasses that, it would be time to have a conversation with a salesperson about buying additional credits.
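The credit arithmetic described above can be sketched in a few lines. This is only an illustration of the stated numbers (20 credits per user per month, one credit per AI task); the function and constant names are hypothetical and do not come from Box.

```python
# Illustrative sketch only: names are hypothetical, not part of any Box API.
MONTHLY_CREDITS_PER_USER = 20  # allowance stated in the article
CREDITS_PER_TASK = 1           # each AI task is charged a single credit


def remaining_credits(tasks_run: int) -> int:
    """Credits left this month after running `tasks_run` AI tasks."""
    used = tasks_run * CREDITS_PER_TASK
    return max(MONTHLY_CREDITS_PER_USER - used, 0)


def needs_more_credits(tasks_run: int) -> bool:
    """True once usage exceeds the monthly allowance."""
    return tasks_run * CREDITS_PER_TASK > MONTHLY_CREDITS_PER_USER


print(remaining_credits(5))     # credits left after 5 tasks
print(needs_more_credits(21))   # whether 21 tasks exceed the allowance
```

Under these assumptions, a user who runs exactly 20 tasks stays within the allowance, and the 21st task is the point at which additional credits would be needed.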

Spang says, for starters, that in spite of the hype, generative AI is clearly a big leap forward, and software companies need to look for ways to incorporate it into their products.

The New York Times is suing OpenAI and its close collaborator (and investor), Microsoft, for allegedly violating copyright law by training generative AI models on The Times’ content.

Actress Sarah Silverman joined a pair of lawsuits in July that accuse Meta and OpenAI of having “ingested” Silverman’s memoir to train their AI models.

As The Times’ complaint alludes to, generative AI models have a tendency to regurgitate training data, for example reproducing almost verbatim results from articles.

“And that’s why most [lawsuits like this] will probably fail.”

Some news outlets, rather than fight generative AI vendors in court, have chosen to ink licensing agreements with them.

In its complaint, The Times says that it attempted to reach a licensing arrangement with Microsoft and OpenAI in April but that talks weren’t ultimately fruitful.

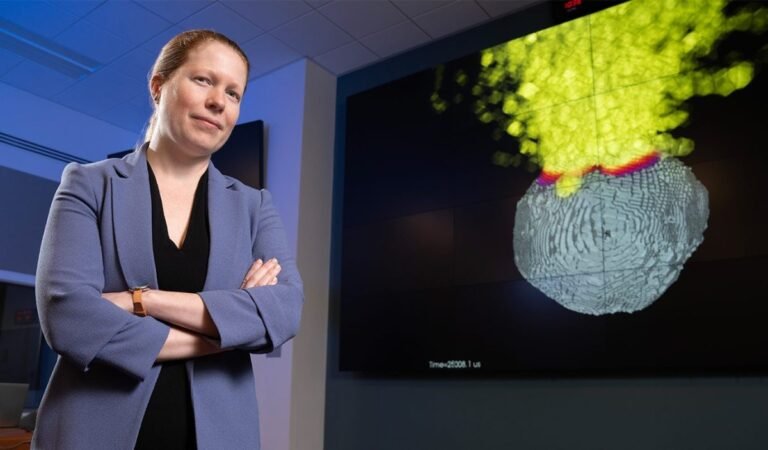

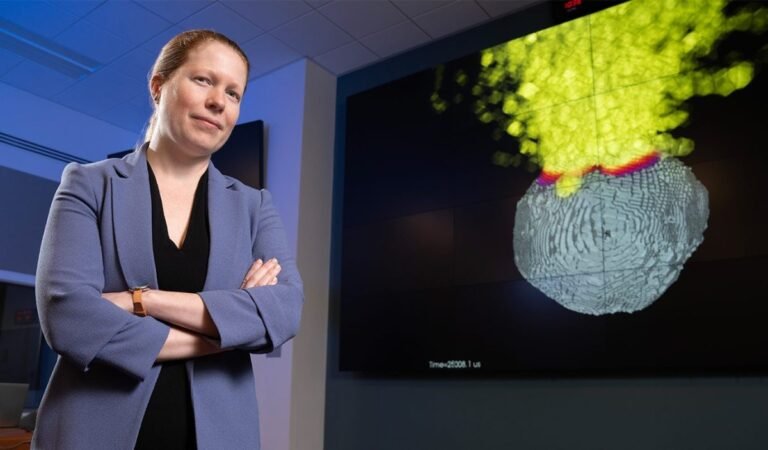

As if last year’s fabulous Double Asteroid Redirection Test firing a satellite bullet into an asteroid wasn’t enough, now researchers are running detailed simulations of the nuclear deflection scenario envisioned in the 1998 space disaster film Armageddon.

At Lawrence Livermore National Lab, a team led by Mary Burkey (above) presented a paper that moves the ball forward on what is in reality a fairly active area of research.

The problem is that a nuclear deflection would need to be done in a very precise way or else it could lead (as it did in Armageddon) to chunks of the asteroid hitting Earth anyway.

In particular, understanding whether or not an attempted deflection mission will break apart an asteroid has been a long-standing question in the planetary defense community.

Every detailed, high-fidelity simulation and every broad sensitivity sweep brings the field closer to understanding how effective nuclear mitigation would be.

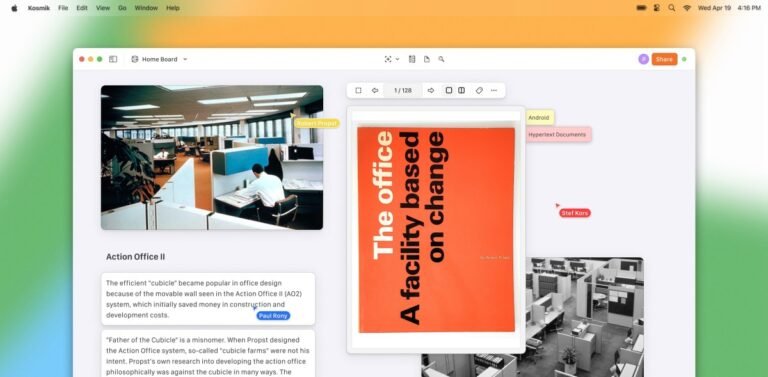

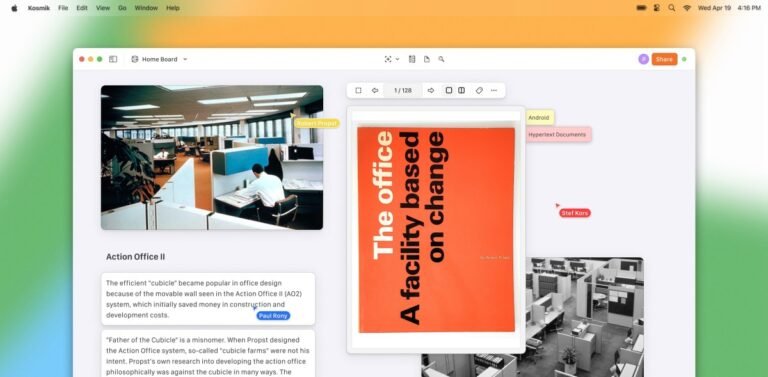

Kosmik was founded in 2018 by Paul Rony and Christophe Van Deputte.

And that’s when he started to build Kosmik, Rony told TechCrunch, drawing on a prior background in computing history and philosophy.

It also features a built-in browser, saving users from having to switch windows when they need to find a relevant website link.

The platform also sports a PDF reader, which lets the user extract elements such as images and text.

“I think that everything revolves around the idea that we do not have the best web browser, text editor or PDF reader,” Rony said.

Tesla’s fix for its Autopilot recall of more than 2 million vehicles is being called “insufficient” by Consumer Reports, following preliminary tests.

While the testing isn’t comprehensive, it shows questions remain unanswered about Tesla’s approach to driver monitoring — the tech at the heart of the recall.

It focused heavily on the Autosteer feature, which is designed to keep a car centered in a lane on controlled-access highways, even around curves.

But NHTSA said in documents released last week that it viewed those checks as “insufficient to prevent misuse.”

Tesla does not restrict the use of Autosteer to controlled-access highways, though.

“None of this is very prescriptive or explicit in terms of what it is they’re going to [change],” Funkhouser says.

With the FTC’s increasing focus on the misuse of biometric surveillance, Rite Aid fell firmly in the government agency’s crosshairs.

And companies such as Clearview AI, meanwhile, have been hit with lawsuits and fines around the world for major data privacy breaches around facial recognition technology.

The FTC’s latest findings regarding Rite Aid also shine a light on inherent biases in AI systems.

Additionally, the FTC said that Rite Aid failed to test or measure the accuracy of its facial recognition system prior to, or after, deployment.

“The allegations relate to a facial recognition technology pilot program the Company deployed in a limited number of stores,” Rite Aid said in its statement.