In OpenAI’s own evaluations on a “challenge set” of English texts, its classifier correctly labeled around 26% of AI-written text as “likely AI-written,” while wrongly labeling human-written text as AI-written about 9% of the time. While this tool is not ideal, it could be useful in preventing AI text generators from being abused.
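A “success rate” can mean several things, so it helps to separate the two numbers a detector is usually judged on: the share of AI-written texts it flags (the true-positive rate) and the share of human-written texts it wrongly flags (the false-positive rate). The sketch below computes both from a simple tally. The `ConfusionCounts` class and the counts in it are hypothetical, chosen only to illustrate a 26% true-positive rate.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Tally of detector verdicts, split by where each text really came from."""
    ai_flagged: int     # AI-written, labeled AI-written (true positives)
    ai_missed: int      # AI-written, not flagged (false negatives)
    human_flagged: int  # human-written, wrongly labeled AI-written (false positives)
    human_passed: int   # human-written, correctly not flagged (true negatives)


def true_positive_rate(c: ConfusionCounts) -> float:
    """Share of AI-written texts that the detector flags."""
    return c.ai_flagged / (c.ai_flagged + c.ai_missed)


def false_positive_rate(c: ConfusionCounts) -> float:
    """Share of human-written texts that the detector wrongly flags."""
    return c.human_flagged / (c.human_flagged + c.human_passed)


# Hypothetical tally: 100 AI-written and 100 human-written texts
counts = ConfusionCounts(ai_flagged=26, ai_missed=74, human_flagged=9, human_passed=91)
print(f"TPR {true_positive_rate(counts):.0%}, FPR {false_positive_rate(counts):.0%}")  # TPR 26%, FPR 9%
```

Reporting both rates matters: a detector can raise its true-positive rate simply by flagging more text, at the cost of more false accusations against human writers.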

The released tool has several limitations. The most obvious is that it still cannot distinguish human-written from AI-generated text with 100 percent accuracy. However, it can serve as a supplementary tool to help determine whether a piece of text is AI-generated. It may be useful, for example, to journalists who want to know the source of an article, or to organizations concerned about false claims that AI-generated text was written by a human.

One of the most important factors in safeguarding against the harmful effects of text-generating AI is trust. If users of such tools do not trust the AI to produce accurate content, they may be less likely to use it and more inclined to rely on human reporters for news. Additionally, if users are unsure how well ChatGPT works, they may be more likely to submit gibberish or nonsensical prompts to trick the tool into generating interesting text. These concerns are serious and merit consideration by those behind text-generating AI tools.

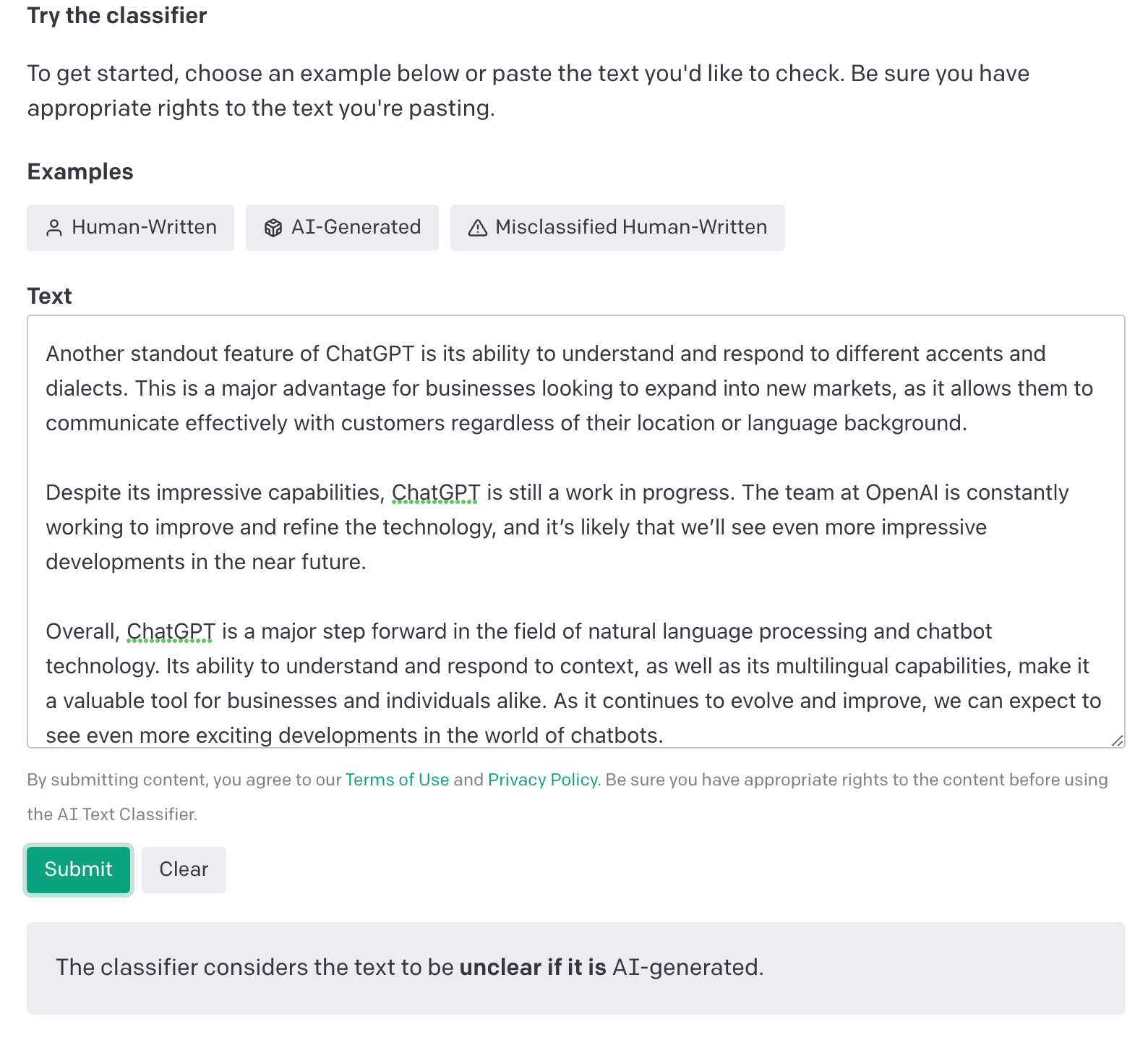

OpenAI’s AI Text Classifier is a fine-tuned GPT model that predicts how likely it is that a piece of text was generated by AI, not just by ChatGPT but by other text-generating models as well. This prediction ability could also be valuable to third-party developers looking to build more natural-sounding chatbots.
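A classifier like this outputs a probability, and a user-facing tool typically turns that number into a coarse verdict. The sketch below shows one way to do that with five labels. The threshold values and the `label_for` function are illustrative assumptions, not OpenAI’s published settings or API.

```python
from bisect import bisect_right

# Hypothetical cut-offs on the probability that a text is AI-generated.
# These values are assumptions for illustration, not OpenAI's settings.
THRESHOLDS = [0.10, 0.45, 0.90, 0.98]
LABELS = [
    "very unlikely AI-generated",
    "unlikely AI-generated",
    "unclear if it is AI-generated",
    "possibly AI-generated",
    "likely AI-generated",
]


def label_for(probability: float) -> str:
    """Map a probability in [0, 1] to one of five coarse labels."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # bisect_right counts how many thresholds are <= probability,
    # which is exactly the index of the matching label
    return LABELS[bisect_right(THRESHOLDS, probability)]


print(label_for(0.05))  # very unlikely AI-generated
print(label_for(0.95))  # possibly AI-generated
print(label_for(0.99))  # likely AI-generated
```

Coarse labels are a deliberate design choice: they avoid implying more precision than the underlying probability estimate can support.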

OpenAI’s recently published text classifier was fine-tuned on pairs of human-written and AI-written text on the same topics. The human-written samples came from sources such as Wikipedia, websites extracted from links shared on Reddit, and “human demonstrations” collected for a previous OpenAI text-generating system. Given the proliferation of AI-generated content on the internet, a tool like this could be used to flag potentially fake or misleading content.

The OpenAI Text Classifier is best suited to English text. It may help in plagiarism-like cases, such as an essay presented as original work that was actually generated by AI, although it judges only whether text looks AI-generated, not whether it was copied from another source. The classifier can also have difficulty recognizing text written by children or in languages other than English, as it was trained primarily on English-language text written by adults.

Artificial intelligence is probably not at work here: the phrase ‘useful idiot’ is an old Russian one and could more accurately be translated as ‘dupe’. This isn’t an AI-generated message; it’s something someone deliberately wrote to mislead or deceive.

Given that ChatGPT is still in its early days, there will likely be some growing pains as developers continue to experiment with the platform. Despite this, ChatGPT has a lot of potential and could revolutionize how we communicate online.

Some people worry that artificial intelligence will one day take over the world and control us all. Others believe that AI will help us achieve amazing things we never could before. I’m on the fence about this issue. I believe that AI can be used for good, but I also understand how it could be abused if not monitored carefully. As AI develops, we need to keep an eye on how it’s being used and make sure it’s not harming humans or our environment.

In the aftermath of the AI economic collapse, many people are worried about what will happen to society. Some claim that we need to get

Classifiers are popular tools in machine learning; a sentiment classifier, for example, estimates the probability that a given sentence is positive or negative. The model achieved 83% accuracy (across all sentences) when evaluated on a corpus of 5,000 examples, but it is less accurate on shorter pieces of text, such as emails. For the classifier to work effectively, the text must contain enough context for it to make accurate predictions about its sentiment.
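One way to check the claim that short texts are harder is to report accuracy separately for short and long inputs, and to decline to classify text below a minimum length. The sketch below does both. `MIN_CHARS`, the 200-character boundary used in the demo, and the keyword-based `toy_classify` are hypothetical stand-ins, not a real sentiment model or a documented threshold.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Optional, Tuple

# Hypothetical minimum length; an assumption for illustration, not a documented value
MIN_CHARS = 1000


def classify_if_long_enough(
    text: str, classify: Callable[[str], str], min_chars: int = MIN_CHARS
) -> Optional[str]:
    """Return the classifier's label, or None when the text is too short to judge."""
    if len(text) < min_chars:
        return None  # too little context for a reliable prediction
    return classify(text)


def accuracy_by_length(
    examples: Iterable[Tuple[str, str]],
    classify: Callable[[str], str],
    boundary: int = MIN_CHARS,
) -> Dict[str, float]:
    """Accuracy computed separately for texts shorter and longer than `boundary` characters."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for text, true_label in examples:
        bucket = "short" if len(text) < boundary else "long"
        total[bucket] += 1
        correct[bucket] += int(classify(text) == true_label)
    return {bucket: correct[bucket] / total[bucket] for bucket in total}


def toy_classify(text: str) -> str:
    """Stand-in sentiment rule for the demo: a keyword check, not a real model."""
    return "positive" if "good" in text.lower() else "negative"


examples = [
    ("Good.", "positive"),
    ("Not good.", "negative"),  # the keyword rule gets this one wrong
    ("The service was good and the staff were friendly. " * 30, "positive"),
]
print(accuracy_by_length(examples, toy_classify, boundary=200))  # {'short': 0.5, 'long': 1.0}
```

A real evaluation would use many labeled examples per bucket; the tiny demo only shows the mechanics.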

While the current classifier can help identify fraudulent submissions, it is not foolproof. AI-generated text can be edited to evade the classifier, and people trying to pass off such text as their own are likely to exploit this weakness. More sophisticated algorithms are needed to reliably identify fraudulent submissions.

How can we tell whether the text we are reading was written by AI? Some apps, such as GPTZero, use criteria including “perplexity” (how predictable the text is to a language model) and “burstiness” (how much sentences vary from one another) to detect whether text might be AI-written. This can be a difficult task: a short, ordinary sentence such as “I am perplexed by your statement.” gives a detector very little to go on.
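Perplexity has a standard definition: the exponential of the average negative log-probability a language model assigns to each token. Burstiness has no single agreed formula; the sketch below assumes it is the standard deviation of per-sentence perplexity, which is only one plausible reading and not necessarily how any particular detector computes it. The token probabilities are made up; in practice they would come from a language model.

```python
import math
import statistics
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """exp of the mean negative log-probability; lower means more predictable text."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_log_prob = sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(-mean_log_prob)


def burstiness(sentence_token_probs: Sequence[Sequence[float]]) -> float:
    """Assumed definition: population standard deviation of per-sentence perplexity."""
    scores = [perplexity(probs) for probs in sentence_token_probs]
    return statistics.pstdev(scores)


# Made-up per-token probabilities for three sentences (not real model output)
sentences = [
    [0.5, 0.5, 0.5, 0.5],  # perplexity about 2
    [0.25, 0.25, 0.25],    # perplexity about 4
    [0.5, 0.5],            # perplexity about 2
]
print(round(burstiness(sentences), 3))  # 0.943
```

The intuition behind such tools is that text mixing predictable and surprising sentences scores higher on this kind of measure than uniformly predictable text; it is a heuristic signal, not proof of authorship.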

Text-generating AI is only getting more accurate, and security researchers will have to find ever more creative ways to catch it in the act. In the meantime, classifiers can be helpful in certain circumstances but will never be able to provide a 100% confirmation that text was actually created by an AI.
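The reason no threshold gives certainty is that detector scores for human and AI text overlap. The sketch below sweeps a decision threshold over hypothetical detector scores: raising it reduces false accusations against human writers but lets more AI-written text through. The scores and the `rates_at_threshold` helper are illustrative assumptions.

```python
from typing import Sequence, Tuple


def rates_at_threshold(
    ai_scores: Sequence[float], human_scores: Sequence[float], threshold: float
) -> Tuple[float, float]:
    """Return (true-positive rate, false-positive rate) when flagging scores >= threshold."""
    tpr = sum(score >= threshold for score in ai_scores) / len(ai_scores)
    fpr = sum(score >= threshold for score in human_scores) / len(human_scores)
    return tpr, fpr


# Hypothetical detector scores; the two groups deliberately overlap
ai_scores = [0.35, 0.60, 0.80, 0.95, 0.99]
human_scores = [0.05, 0.20, 0.40, 0.70, 0.92]

for threshold in (0.30, 0.50, 0.90, 0.98):
    tpr, fpr = rates_at_threshold(ai_scores, human_scores, threshold)
    print(f"threshold {threshold:.2f}: TPR {tpr:.0%}, FPR {fpr:.0%}")
```

With these scores, a threshold of 0.30 catches every AI-written text but flags 60% of the human-written ones, while 0.98 flags no human text but catches only 20% of the AI text.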

AI-generated text presents a number of challenges for readers, developers, and designers. It can be difficult to understand and format, and it can be jarring when it clashes with traditional writing styles. In general, AI-generated text requires more attention to detail than typical writing.